How AI Video Generators Like Sora Actually Work

The Four-Step Process Behind AI Video Generation

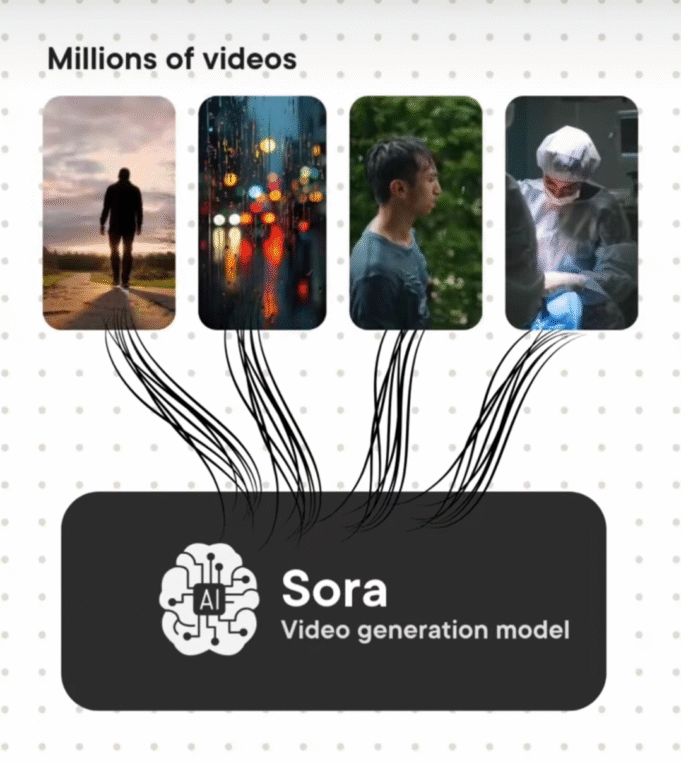

Step 1: Learning Reality Through Training

During training, Sora watches millions of hours of video, but it doesn’t memorize pixels. Think of a master painter studying thousands of sunsets: they learn how light bends, how colors blend, and all the small details in between.

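Sora does the same thing with motion and physics. It learns that smoke rises, water ripples, and clothes wrinkle. That knowledge, the AI’s visual intuition, ends up in the model’s learned weights. The videos themselves are handled in compressed form: a separate trained network squeezes each clip into latent space, a compact representation that aims to keep what matters visually while being far cheaper to work with than raw pixels. It’s not storing videos; it’s learning the patterns of how the world looks and moves.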
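To make “compressed into latent space” a little more concrete, here is a toy stand-in in Python. OpenAI’s technical report describes a trained network that compresses video both spatially and temporally; this sketch swaps that for plain block averaging in a hypothetical `compress_video` helper. It is not Sora’s encoder, only a way to show a clip shrinking into a smaller grid of numbers.

```python
def compress_video(video, t_factor=2, s_factor=2):
    """Toy 'encoder': average non-overlapping t_factor x s_factor x s_factor blocks.

    `video` is a grayscale clip as nested lists indexed [frame][row][col].
    A real video model learns its encoder; plain averaging is only a stand-in.
    """
    frames, height, width = len(video), len(video[0]), len(video[0][0])
    if frames % t_factor or height % s_factor or width % s_factor:
        raise ValueError("clip dimensions must be divisible by the downsampling factors")
    latent = []
    for t0 in range(0, frames, t_factor):
        latent_frame = []
        for y0 in range(0, height, s_factor):
            latent_row = []
            for x0 in range(0, width, s_factor):
                # Gather every value in this space-time block and average it.
                block = [video[t][y][x]
                         for t in range(t0, t0 + t_factor)
                         for y in range(y0, y0 + s_factor)
                         for x in range(x0, x0 + s_factor)]
                latent_row.append(sum(block) / len(block))
            latent_frame.append(latent_row)
        latent.append(latent_frame)
    return latent


# An 8-frame, 16x16 clip whose brightness equals the frame index.
clip = [[[float(t)] * 16 for _ in range(16)] for t in range(8)]
latent = compress_video(clip)
print(len(latent), len(latent[0]), len(latent[0][0]))  # 4 8 8
print(latent[0][0][0])  # 0.5 (average of frames 0 and 1)
```

Here 2,048 pixel values shrink to 256 latent values, an 8× reduction. A learned encoder is trained to keep visually important detail rather than blurring everything uniformly the way averaging does.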

Step 2: Breaking Down Space-Time Into Patches

During training, and again every time it generates, Sora chops videos (in their compressed latent form) into small space-time patches: Lego-like pieces that capture both what something looks like and how it moves.

Think of a sunset: one patch captures the yellow sky, and the next patch in time captures how that yellow fades to orange. Each Lego piece becomes a token, much like the word pieces ChatGPT reads. The transformer learns how these tokens relate to one another across space and time, which is how it learns which visual moments naturally follow others.
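A minimal patchify sketch in Python, assuming the latent video is stored as nested lists indexed `[frame][row][col]` and using a hypothetical 2×2×2 patch size (Sora’s actual patch sizes aren’t given here). Because each patch spans two consecutive latent frames, every token carries a little motion as well as appearance.

```python
def patchify(latent, pt=2, ph=2, pw=2):
    """Cut a [frame][row][col] latent video into pt x ph x pw space-time patches.

    Each patch is flattened into one token: a list of pt * ph * pw numbers,
    ordered frame-major, then row, then column. Returns (position, token) pairs,
    where position is the patch's (t, y, x) index in the patch grid.
    """
    frames, height, width = len(latent), len(latent[0]), len(latent[0][0])
    if frames % pt or height % ph or width % pw:
        raise ValueError("latent dimensions must be divisible by the patch size")
    tokens = []
    for t0 in range(0, frames, pt):
        for y0 in range(0, height, ph):
            for x0 in range(0, width, pw):
                token = [latent[t][y][x]
                         for t in range(t0, t0 + pt)
                         for y in range(y0, y0 + ph)
                         for x in range(x0, x0 + pw)]
                tokens.append(((t0 // pt, y0 // ph, x0 // pw), token))
    return tokens


# A 4-frame, 8x8 latent video -> 2 x 4 x 4 = 32 tokens of 2*2*2 = 8 numbers each.
# Each value encodes its own position as t*100 + y*10 + x, so tokens are easy to read.
latent = [[[float(t * 100 + y * 10 + x) for x in range(8)] for y in range(8)] for t in range(4)]
tokens = patchify(latent)
print(len(tokens), len(tokens[0][1]))  # 32 8
print(tokens[0])  # ((0, 0, 0), [0.0, 1.0, 10.0, 11.0, 100.0, 101.0, 110.0, 111.0])
```

Transformers built on this idea typically pass each flattened patch through a learned linear layer and add positional information; this sketch only keeps the grid position alongside each token.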

Step 3: The Diffusion Process Sculpts Your Video

Now the model is trained. You type a prompt, something like “woolly mammoth walking in the snow,” and Sora gets started. It begins with pure random noise, like TV static, in the compressed latent space rather than in pixels.

Then it runs the diffusion process, stripping away a little noise at each step, and things start to happen (the step counts below are illustrative):

- Step 1: Barely recognizable shapes emerge

- Step 10: Brown and white blobs start to appear

- Step 25: Those blobs develop fur textures

- Step 50: A fully formed mammoth walking in the snow

Essentially, it sculpts thousands of space-time patches (those Lego pieces) at once, guided by your prompt and the patterns it learned in training. It’s like watching a photograph develop: it starts as noise and gradually comes into focus.
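The denoising loop can be sketched in Python as below. This is a generic deterministic DDIM-style update (eta = 0), not Sora’s published sampler. The schedule `(k / num_steps) ** 2` and the `oracle` denoiser, which simply returns a known target, are hypothetical stand-ins for a trained, prompt-conditioned network.

```python
import math
import random


def sample(denoise, size, num_steps=50, seed=0):
    """Deterministic DDIM-style sampling (eta = 0) over a flat list of latent values.

    denoise(x, alpha_bar) must return a guess of the clean latent x0.
    alpha_bar is the fraction of signal in x: 0 means pure noise, 1 means clean.
    """
    rng = random.Random(seed)
    x = [rng.gauss(0.0, 1.0) for _ in range(size)]  # start from pure "TV static"
    # Hypothetical schedule: alpha_bar rises from 0 to 1 over num_steps steps.
    alpha_bars = [(k / num_steps) ** 2 for k in range(num_steps + 1)]
    for k in range(num_steps):
        a_cur, a_next = alpha_bars[k], alpha_bars[k + 1]
        x0_hat = denoise(x, a_cur)
        # Noise implied by the current sample and the clean-latent guess.
        eps_hat = [(xi - math.sqrt(a_cur) * x0i) / math.sqrt(1.0 - a_cur)
                   for xi, x0i in zip(x, x0_hat)]
        # Recombine guess and noise at the next, less noisy, level.
        x = [math.sqrt(a_next) * x0i + math.sqrt(1.0 - a_next) * ei
             for x0i, ei in zip(x0_hat, eps_hat)]
    return x


target = [0.2, -0.5, 0.9, 0.0]  # hypothetical clean "mammoth" latent


def oracle(x, alpha_bar):
    """Cheating denoiser that always knows the answer; a real model must learn this."""
    return target


result = sample(oracle, size=len(target))
print(all(abs(r - t) < 1e-12 for r, t in zip(result, target)))  # True
```

With a perfect denoiser the loop lands exactly on the target. A real network’s guesses are rough while the input is mostly noise and typically improve as the noise drops, which is why shapes come first and fur texture later.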

Step 4: Keeping It Real Across Time

A 60-second video contains well over a thousand frames (1,440 at 24 frames per second), and every one of them has to stay consistent with the rest. This is where the magic really happens.
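To get a feel for the scale, here is some back-of-the-envelope arithmetic in Python. The 1280×720 resolution, the 4× temporal and 8× spatial compression, and the 2×2 patch size are hypothetical round numbers chosen for illustration, not Sora’s published settings.

```python
def frame_count(seconds, fps):
    """Number of frames in a clip."""
    return seconds * fps


def token_count(frames, height, width, t_down, s_down, patch_t, patch_s):
    """Space-time patches left after latent compression and patchification."""
    latent_t, latent_h, latent_w = frames // t_down, height // s_down, width // s_down
    return (latent_t // patch_t) * (latent_h // patch_s) * (latent_w // patch_s)


frames = frame_count(60, 24)
tokens = token_count(frames, height=720, width=1280, t_down=4, s_down=8, patch_t=1, patch_s=2)
pairs = tokens * tokens  # patch-to-patch comparisons if every patch looks at every other
print(frames)  # 1440
print(tokens)  # 1296000
print(f"{pairs:.3e}")  # 1.680e+12
```

Numbers like these are one reason video models work on compressed latents rather than raw pixels: the fewer tokens there are, the cheaper it is to let every patch see every other.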

The attention mechanism lets patches (those Lego pieces) exchange information across the entire video. When the mammoth’s left leg appears in frame 100, those patches can draw on the matching patches in frames 200, 500, and 900, which helps keep the same leg, the same curve, and the same lighting consistent throughout.

This is a big part of what reduces the “AI weirdness” you see in older models, where hands morph or objects disappear. Attention helps maintain temporal coherence across the entire generation, though it doesn’t guarantee it.
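The core of attention fits in a few lines of Python. This is standard single-head scaled dot-product self-attention with the learned query, key, and value projections left out for brevity; the 2-number tokens labeled with frames 100, 200, 500, and 900 are hypothetical stand-ins for real patch tokens.

```python
import math


def self_attention(tokens):
    """Single-head scaled dot-product self-attention with Q = K = V = tokens.

    Real transformers first apply learned query/key/value projections; they are
    omitted here. Returns (outputs, weights), where weights[i][j] is how much
    token i draws on token j.
    """
    dim = len(tokens[0])
    scale = 1.0 / math.sqrt(dim)
    outputs, weights = [], []
    for query in tokens:
        scores = [scale * sum(q * k for q, k in zip(query, key)) for key in tokens]
        top = max(scores)  # subtract the max before exp() for numerical stability
        exps = [math.exp(s - top) for s in scores]
        total = sum(exps)
        row = [e / total for e in exps]  # softmax: non-negative, sums to 1
        weights.append(row)
        # Each output mixes information from every token in the clip, whatever its frame.
        outputs.append([sum(w * value[c] for w, value in zip(row, tokens)) for c in range(dim)])
    return outputs, weights


# Hypothetical tokens: four patches of the mammoth's left leg plus one patch of snow.
patches = {
    "leg, frame 100": [1.0, 0.0],
    "leg, frame 200": [0.95, 0.05],
    "leg, frame 500": [0.9, 0.1],
    "leg, frame 900": [0.97, 0.0],
    "snow, frame 100": [0.0, 1.0],
}
outputs, weights = self_attention(list(patches.values()))
for name, w in zip(patches, weights[0]):
    print(f"leg, frame 100 -> {name}: {w:.2f}")
```

The leg patch in frame 100 puts more weight on the leg patches in other frames than on the snow patch, even though they are hundreds of frames apart. Real models run this across many heads and layers.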

What Makes Sora Different from Earlier AI Video Tools?

Sora represents a significant leap in AI video generation technology. Unlike earlier tools that struggled with consistency and realism, Sora can generate complex scenes with multiple characters, specific types of motion, and accurate details of both subject and background.

Key Advantages:

- Models real-world physics such as gravity, motion, and lighting, though not flawlessly

- Maintains consistency across longer video durations

- Handles multiple characters and complex interactions

- Generates videos up to 60 seconds with coherent narratives

- Generates synchronized audio (a Sora 2 addition), including music, sound effects, and dialogue

How Long Does It Take to Generate a Video?

Once you submit your prompt, Sora typically takes up to about a minute to generate the video. Longer, more complex, or higher-resolution videos take more time. You can check your video’s status at any time in the interface.

What Can Sora 2 Do Beyond Text-to-Video?

Sora 2 introduces several advanced capabilities beyond simple text-to-video generation:

- Real-World Injection: Insert real people into AI-generated environments with accurate appearance and voice

- Video Remixing: Take existing videos and transform them with different styles or elements

- Cameo Features: Drop yourself into others’ AI-generated videos

- Collaborative Creation: Browse personalized feeds and remix trending creations

💡 The Technical Foundation

At its core, Sora combines diffusion models (which gradually denoise static into coherent images) with a transformer architecture (which models relationships between elements); OpenAI describes it as a diffusion transformer. Videos are generated in latent space by denoising 3D space-time patches, conditioned on your text prompt, and a trained decoder then maps the result back to ordinary pixel video. This architecture lets it capture not just what users ask for in prompts, but how those things tend to look and move in the physical world.
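The final stage, going from denoised patches back to watchable frames, can be sketched in Python as two hypothetical helpers: `unpatchify` reassembles patch tokens into a latent video, and `decode` scales it back up. Sora’s decoder is a trained network; nearest-neighbor upsampling stands in for it here purely to show the shapes.

```python
def unpatchify(tokens, grid, pt=2, ph=2, pw=2):
    """Reassemble ((t, y, x), token) pairs into a [frame][row][col] latent video.

    grid = (patches along time, rows, cols). Token values are assumed to be
    ordered frame-major, then row, then column within each patch.
    """
    gt, gy, gx = grid
    latent = [[[0.0] * (gx * pw) for _ in range(gy * ph)] for _ in range(gt * pt)]
    for (ti, yi, xi), token in tokens:
        values = iter(token)
        for t in range(ti * pt, (ti + 1) * pt):
            for y in range(yi * ph, (yi + 1) * ph):
                for x in range(xi * pw, (xi + 1) * pw):
                    latent[t][y][x] = next(values)
    return latent


def decode(latent, s_factor=2, t_factor=1):
    """Stand-in decoder: repeat each latent value in space (and optionally time)."""
    frames = []
    for t in range(len(latent) * t_factor):
        src = latent[t // t_factor]
        frame = []
        for y in range(len(src) * s_factor):
            src_row = src[y // s_factor]
            frame.append([src_row[x // s_factor] for x in range(len(src_row) * s_factor)])
        frames.append(frame)
    return frames


# One 2x2x2 patch whose values are 0..7 in frame-major order.
tokens = [((0, 0, 0), [float(v) for v in range(8)])]
latent = unpatchify(tokens, grid=(1, 1, 1))
video = decode(latent)
print(latent[1][0][1])  # 5.0
print(len(video), len(video[0]), len(video[0][0]))  # 2 4 4
print(video[1][0][2])  # 5.0, copied from latent[1][0][1]
```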

⚠️ Current Limitations to Know

While impressive, AI video generation isn’t perfect yet:

- Physics Inconsistencies: Complex physics (like glass shattering) can still look unnatural

- Fine Details: Small text, intricate patterns, or rapid movements may blur or distort

- Spatial Understanding: Left/right distinctions and precise object placement can be challenging

- Temporal Coherence: Very long videos may show subtle inconsistencies over time

- Access Limitations: At the time of writing, access is invite-based and limited to certain regions

🎬 Practical Applications

AI video generation is already being put to work across industries:

- Content Creation: Social media creators generating unique video content

- Advertising: Rapid prototyping of commercial concepts and storyboards

- Education: Creating visual explanations and demonstrations

- Entertainment: Pre-visualization for film and game development

- Product Design: Visualizing concepts before physical prototyping

The Future of AI Video

We’re witnessing the early days of a revolution in content creation. As these models improve, expect longer videos, better physics simulation, more precise control over output, and integration with other AI tools. The line between AI-generated and traditional video production will continue to blur, creating entirely new creative possibilities.

There’s a lot more to it, but those four steps are the main reason these videos look so realistic.

Key Takeaway: AI video generation isn’t magic—it’s a combination of massive training datasets, clever mathematical techniques, and architectural innovations. Understanding these four steps helps demystify the technology and reveals both its incredible potential and current limitations.