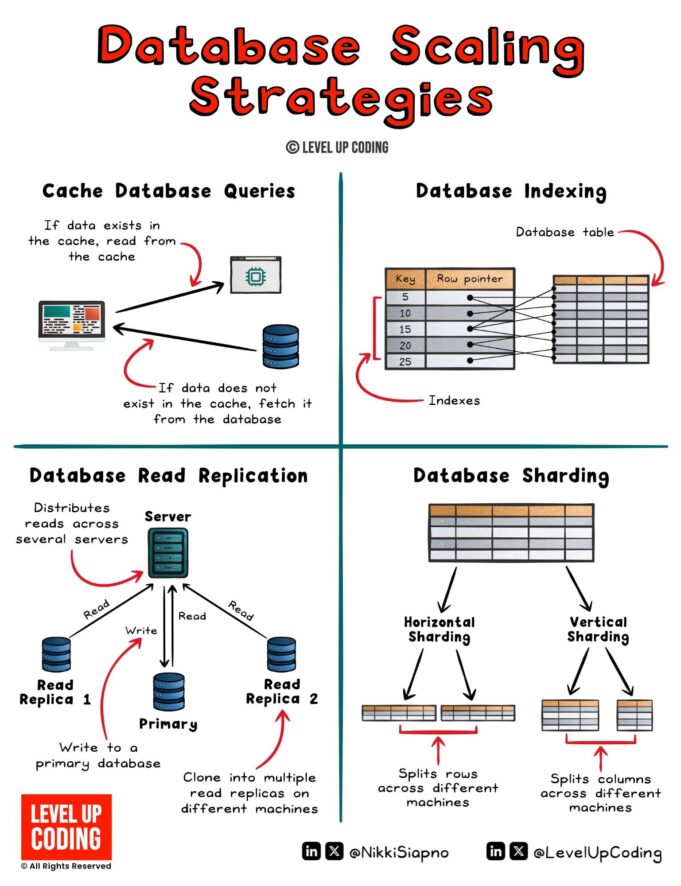

If you want to scale a database, your traffic patterns should guide your decisions. There’s no perfect answer, only trade-offs. Here are four core strategies ranked by implementation complexity and performance impact.

1. Cache Database Queries

Caching is one of the simplest ways to reduce database load. Tools like Redis or Memcached store frequently requested query results in memory, so your app can fetch data faster without hitting the database repeatedly.

Key Benefits

- 70% reduction in database calls (typical for session/query caching)

- Sub-millisecond response times vs. 50-200ms database queries

- Minimal code changes — often just adding a cache-aside pattern

| Tool | Best For | Typical Latency |
|---|---|---|
| Redis | Complex data structures, persistence | <2ms |
| Memcached | Simple key-value, multi-threaded | <2ms |

2. Database Indexes

Indexing is another straightforward strategy that delivers outsized performance benefits. An index lets the database locate matching rows without scanning the whole table. Indexes are typically implemented as B-trees, which reduce lookup time complexity from O(n) to O(log n), so queries run significantly faster.

How It Works

In the worked example in Wikipedia’s B-tree article, finding a record takes 14 disk block reads without an index but only 3 block reads with a B-tree-style index. That’s a reduction from 150 milliseconds to 30 milliseconds.
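The reason so few blocks are read is that each block holds many keys, so every level of the tree narrows the search by a large factor. The arithmetic can be sketched as below. The table size and keys-per-block figures are hypothetical, chosen for illustration rather than taken from the example above.

```python
def levels_needed(num_keys: int, fanout: int) -> int:
    """Smallest number of levels such that fanout**levels >= num_keys."""
    levels, capacity = 0, 1
    while capacity < num_keys:
        capacity *= fanout
        levels += 1
    return levels


rows = 1_000_000      # hypothetical table size
keys_per_block = 100  # hypothetical fan-out of each index block

binary_steps = levels_needed(rows, 2)              # halving the range each step
btree_levels = levels_needed(rows, keys_per_block)  # narrowing by 100x each step

print(binary_steps, btree_levels)  # prints: 20 3
```

With a fan-out of 100, three levels are enough to address a million keys, whereas halving the search space one comparison at a time takes 20 steps.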

B+ Trees in InnoDB maintain logarithmic time complexity O(log n) for searches, inserts, and updates by keeping the tree balanced and storing data only in leaf nodes.
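To see an index change how a query is executed, one option is to compare the query plan before and after creating it. The sketch below uses SQLite (bundled with Python) as a convenient stand-in. It is not InnoDB, and the `users` table and `idx_users_email` index are invented for the example. The exact plan wording varies between SQLite versions.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, email TEXT, name TEXT)")
conn.executemany(
    "INSERT INTO users (email, name) VALUES (?, ?)",
    [(f"user{i}@example.com", f"User {i}") for i in range(1000)],
)

QUERY = "SELECT name FROM users WHERE email = ?"


def query_plan(connection, sql, params):
    """Return the human-readable steps of SQLite's plan for a statement."""
    rows = connection.execute("EXPLAIN QUERY PLAN " + sql, params).fetchall()
    return [row[-1] for row in rows]  # the last column holds the description


plan_before = query_plan(conn, QUERY, ("user500@example.com",))

conn.execute("CREATE INDEX idx_users_email ON users (email)")
plan_after = query_plan(conn, QUERY, ("user500@example.com",))

print("before:", plan_before)  # a full table scan
print("after: ", plan_after)   # a search that uses idx_users_email
```

MySQL offers a comparable `EXPLAIN` statement for checking whether a query uses an index.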

Critical Considerations

- Index type matters: B-tree for range queries, Hash for exact matches

- Write overhead: Each insert/update must maintain index balance

- Storage cost: Secondary indexes in InnoDB duplicate the primary key
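The difference between hash and ordered indexes can be illustrated with plain Python data structures. This is an analogy under stated assumptions, not how a database engine stores its indexes: a `dict` stands in for a hash index, and a sorted list searched with `bisect` stands in for the ordered keys of a B-tree. The order data is invented.

```python
from bisect import bisect_left, bisect_right

orders = {1001: "book", 1005: "lamp", 1010: "desk", 1020: "chair", 1042: "rug"}


def exact_match(order_id):
    """Hash-style lookup: finds one exact key, but knows nothing about order."""
    return orders.get(order_id)


# Ordered keys allow binary search to both ends of a range, which is the
# property that makes B-tree indexes suitable for range queries.
sorted_ids = sorted(orders)


def range_query(low, high):
    """Return items with low <= order_id <= high, in key order."""
    start = bisect_left(sorted_ids, low)
    end = bisect_right(sorted_ids, high)
    return [orders[i] for i in sorted_ids[start:end]]


print(exact_match(1010))        # prints: desk
print(range_query(1005, 1020))  # prints: ['lamp', 'desk', 'chair']
```

Answering the range query from the `dict` alone would require checking every key, which is why a hash index does not help with `BETWEEN` or `ORDER BY` style queries.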

3. Database Read Replication

In read-heavy environments, replication is often the next option to explore. With read replication, all writes go to a single primary database. Its data is copied to as many replica databases as you need, each running on a separate machine, and reads are served from those replicas.
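The routing rule described above (writes to one primary, reads spread across replicas) might look roughly like this. `Node` and `ReplicatedDatabase` are hypothetical names for this sketch, the nodes are plain dictionaries rather than real servers, and replication is modeled as an immediate copy for simplicity.

```python
import itertools


class Node:
    """Hypothetical stand-in for a database server; rows live in a dict."""

    def __init__(self, name):
        self.name = name
        self.rows = {}


class ReplicatedDatabase:
    def __init__(self, primary, replicas):
        self.primary = primary
        self.replicas = replicas
        self._next_replica = itertools.cycle(replicas)

    def write(self, key, value):
        # All writes go to the single primary.
        self.primary.rows[key] = value
        # Simplification: copy to replicas immediately. Real replication is
        # usually asynchronous, so replicas can lag behind the primary.
        for replica in self.replicas:
            replica.rows[key] = value

    def read(self, key):
        # Reads rotate across replicas (round-robin) to spread the load.
        replica = next(self._next_replica)
        return replica.name, replica.rows.get(key)


db = ReplicatedDatabase(Node("primary"), [Node("replica-1"), Node("replica-2")])
db.write("user:1", "Ada")
print(db.read("user:1"))  # prints: ('replica-1', 'Ada')
print(db.read("user:1"))  # prints: ('replica-2', 'Ada')
```

In practice this routing usually lives in a database driver, proxy, or connection pool rather than in application code.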

When to Use

As one commenter noted, “applying indexes” and “materialized views for complex joins” should often come before replication. Leader-follower replication shines when:

- Read traffic exceeds 70% of total queries

- Indexes and caching can’t reduce primary database load enough

- Geographic distribution of users requires low-latency reads

Trade-Offs

Replication introduces eventual consistency: a replica can briefly return stale data until it catches up with the primary. As one user wisely observed: “Check how the team plans for coordination overhead as the system grows. Cross-node communication can dominate.”
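A toy model can make the consistency trade-off concrete. In the sketch below, `LaggingReplicaSet` is a hypothetical class in which the replica only sees a write after `apply_pending()` runs, standing in for asynchronous replication lag. The `require_fresh` flag is an assumption of this example, showing one common workaround: sending reads that must see the latest write to the primary.

```python
class LaggingReplicaSet:
    """Toy model: writes reach the replica only when apply_pending() runs."""

    def __init__(self):
        self.primary = {}
        self.replica = {}
        self._pending = []  # replication log not yet applied to the replica

    def write(self, key, value):
        self.primary[key] = value
        self._pending.append((key, value))

    def apply_pending(self):
        """Simulate the replica catching up with the primary."""
        for key, value in self._pending:
            self.replica[key] = value
        self._pending.clear()

    def read(self, key, require_fresh=False):
        # require_fresh routes the read to the primary, trading extra
        # primary load for read-your-writes behavior.
        source = self.primary if require_fresh else self.replica
        return source.get(key)


db = LaggingReplicaSet()
db.write("cart:42", ["book"])

stale = db.read("cart:42")                      # replica has not caught up yet
fresh = db.read("cart:42", require_fresh=True)  # served by the primary
db.apply_pending()
caught_up = db.read("cart:42")                  # replica now has the write

print(stale, fresh, caught_up)  # prints: None ['book'] ['book']
```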

4. Database Sharding

While the previous strategies focus on handling read load, sharding focuses on reads and writes. Sharding involves splitting your database into smaller, independent pieces (shards), with each handling a subset of the data.

This enables horizontal scaling by distributing the load across multiple servers. While powerful, sharding adds significant complexity to data management and query logic, so it is typically a strategy to consider only after exhausting simpler solutions.
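One simple way to decide which shard owns a row is to hash a shard key and take the result modulo the number of shards. The sketch below assumes four shards, each modeled as a dictionary. `shard_for`, `put`, and `get` are hypothetical helpers for illustration, and hash-based routing is only one of several possible strategies.

```python
import hashlib

NUM_SHARDS = 4  # hypothetical shard count


def shard_for(key: str, num_shards: int = NUM_SHARDS) -> int:
    """Map a key to a shard index using a stable hash.

    hashlib is used instead of the built-in hash(), which is randomized per
    process for strings and would route the same key differently after a restart.
    """
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % num_shards


# Each dict stands in for an independent database server.
shards = [dict() for _ in range(NUM_SHARDS)]


def put(key, value):
    shards[shard_for(key)][key] = value


def get(key):
    # Only the owning shard is consulted; the others are never touched.
    return shards[shard_for(key)].get(key)


put("user:1", "Ada")
print(get("user:1"))  # prints: Ada
```

Because every read and write for a key lands on a single shard, both kinds of load are spread across servers. Queries that span many keys are where the added complexity appears.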

The Complexity Reality

InterSystems documentation notes that sharding scales query processing by executing queries in parallel, but according to CodeCalls, this introduces challenges:

- Cross-shard joins: Operations requiring data from multiple shards

- Distributed transactions: Maintaining ACID properties across shards

- Hotspot problems: The “Celebrity Problem” where one shard gets disproportionate traffic

- Rebalancing overhead: Moving data between shards as patterns change
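The rebalancing cost in the last bullet can be estimated with a short experiment. The sketch below assumes simple modulo-based hash routing (the `shard_for` helper is hypothetical) and counts how many of 10,000 made-up keys would change shards when a fifth shard is added to a four-shard cluster.

```python
import hashlib


def shard_for(key: str, num_shards: int) -> int:
    """Stable hash of the key, reduced modulo the shard count."""
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % num_shards


def fraction_moved(keys, old_count: int, new_count: int) -> float:
    """Share of keys whose shard changes when the shard count changes."""
    moved = sum(
        1 for key in keys if shard_for(key, old_count) != shard_for(key, new_count)
    )
    return moved / len(keys)


keys = [f"user:{i}" for i in range(10_000)]
moved = fraction_moved(keys, old_count=4, new_count=5)
print(f"{moved:.0%} of keys change shards when going from 4 to 5 shards")
```

With a uniform hash, a key stays put only when its hash gives the same remainder modulo 4 and modulo 5, which happens for about one key in five. Roughly 80% of the data would therefore have to move. Schemes such as consistent hashing or directory-based lookup are commonly used to reduce this.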

When Sharding Makes Sense

Companies like Uber, Shopify, and Slack use sharding with Vitess at petabyte scale. But as DigitalOcean notes, many don’t need it:

“The time and resources needed to create and maintain a sharded architecture could outweigh the benefits.”

Consider vertical scaling first: modern cloud databases can run on instances with 128 vCPUs and 4 TB of RAM.

The Recommended Order

Based on community consensus and real-world experience, implement in this sequence:

1. Database Indexes: Biggest gains, least effort

2. Query Caching (Redis/Memcached): Simple, dramatic impact

3. Materialized Views: For complex joins (denormalization)

4. Read Replicas: When reads explode

5. Partitioning: Logical data separation within same DB

6. Sharding: Last resort for true horizontal scaling

As SqlCheat’s 2025 guide emphasizes: Success depends on choosing the right sharding strategy… but only after simpler solutions are exhausted.