Google DeepMind unveiled AlphaEvolve, a Gemini-powered coding agent that autonomously discovers and optimizes algorithms by combining LLM creativity with evolutionary computation. Now available in private preview on Google Cloud, it has already recovered 0.7% of compute capacity across Google’s data centers, cut Gemini training time by 1%, and broken a 56-year-old matrix multiplication record.

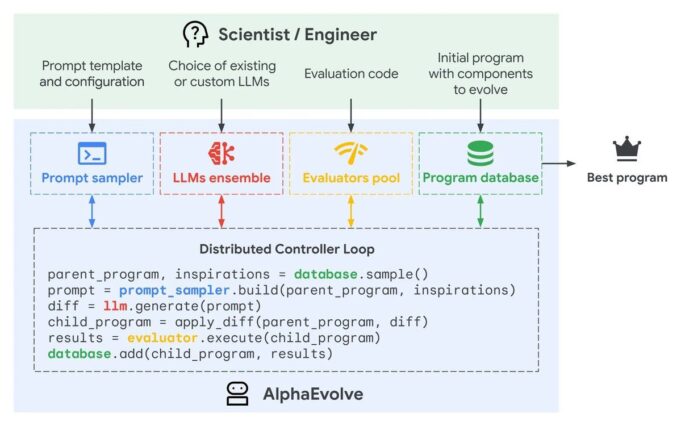

Unlike code assistants that write from scratch, AlphaEvolve iteratively improves existing algorithms through a four-step cycle:

- Prompt Construction: Samples high-performing solutions from a database, adds problem context, and builds targeted prompts

- LLM Ensemble: Gemini 2.0 Flash rapidly generates code mutations (breadth), while Gemini 2.0 Pro provides breakthrough insights (depth)

- Automated Evaluation: Each variant runs against user-defined tests, correctness checks, performance benchmarks, and resource usage metrics

- Evolutionary Selection: Best candidates become “parents” for the next generation; the loop repeats until no improvement emerges
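
The four steps above can be sketched as a simple loop. This is a toy illustration under stated assumptions, not AlphaEvolve's implementation: the "program" being evolved is a single number, the LLM ensemble is replaced by a random perturbation (`mutate`), and every name here (`evolve`, `patience`, `population_size`) is hypothetical.

```python
import random

def evaluate(candidate):
    """Score a candidate; higher is better. Toy objective with its peak at 3.0."""
    return -(candidate - 3.0) ** 2

def mutate(parent, rng):
    """Stand-in for the LLM ensemble (assumption): randomly perturb the parent."""
    return parent + rng.gauss(0.0, 0.5)

def evolve(seed, generations=200, population_size=20, patience=50, rng=None):
    rng = rng or random.Random(0)
    # The "database" of scored candidates, kept sorted best-first.
    database = [(evaluate(seed), seed)]
    best_score = database[0][0]
    stale = 0  # generations since the last improvement
    for _ in range(generations):
        # 1. Prompt construction: sample a parent from the top quarter.
        top = database[: max(1, len(database) // 4)]
        _, parent = rng.choice(top)
        # 2. Generation: produce a mutated child.
        child = mutate(parent, rng)
        # 3. Automated evaluation: score the child.
        score = evaluate(child)
        # 4. Evolutionary selection: keep only the best candidates.
        database.append((score, child))
        database = sorted(database, reverse=True)[:population_size]
        if score > best_score:
            best_score, stale = score, 0
        else:
            stale += 1
            if stale >= patience:
                break  # no improvement for `patience` generations
    return database[0]

best_score, best = evolve(seed=0.0)
print(f"best candidate {best:.3f} with score {best_score:.5f}")
```

In a real system the expensive parts are steps 2 and 3; the selection logic itself stays this small.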

What Makes This Different From FunSearch

| Feature | FunSearch (2023) | AlphaEvolve (2025) |
|---|---|---|
| Scope | Single Python functions (10-20 lines) | Entire codebases (hundreds of lines, any language) |
| LLM Used | Codey (code-only training) | Gemini 2.0 Flash + Pro (frontier models) |
| Objectives | Single optimization goal | Multi-objective (speed, memory, accuracy simultaneously) |
| Sample Efficiency | Millions of LLM calls | Thousands (100-1000x fewer) |
| Evaluation Time | ≤20 minutes per test (CPU only) | Hours-long runs on GPUs/TPUs |
| Problems Solved | 4 mathematical challenges | 50+ math problems, 4 Google infrastructure optimizations |
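
To make "multi-objective" concrete, one common way to compare candidates on several metrics at once is Pareto dominance: a candidate is kept if no other candidate is at least as good on every metric and strictly better on one. The sketch below is only an illustration of that general idea; the text above does not say which selection rule AlphaEvolve uses, and the names `dominates` and `pareto_front` and the metric values are made up. All metrics are assumed to be normalized so that higher is better.

```python
def dominates(a, b):
    """True if metrics `a` are >= `b` on every key and > `b` on at least one."""
    return all(a[k] >= b[k] for k in a) and any(a[k] > b[k] for k in a)

def pareto_front(candidates):
    """Return the candidates that no other candidate dominates."""
    return [
        c for c in candidates
        if not any(dominates(o["metrics"], c["metrics"])
                   for o in candidates if o is not c)
    ]

# Hypothetical scores; higher is better for every metric.
candidates = [
    {"name": "A", "metrics": {"speed": 1.0, "memory": 0.5, "accuracy": 0.9}},
    {"name": "B", "metrics": {"speed": 0.8, "memory": 0.4, "accuracy": 0.9}},
    {"name": "C", "metrics": {"speed": 0.6, "memory": 0.9, "accuracy": 0.9}},
]
front = [c["name"] for c in pareto_front(candidates)]
print(front)
```

Here B is dropped because A is at least as good everywhere, while A and C both survive because each wins on a different metric.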

According to DeepMind, AlphaEvolve has been deployed internally across Google’s infrastructure with measurable gains:

Data Center Scheduling

- Developed heuristic recovering 0.7% of stranded compute capacity globally

- Equivalent to hundreds of thousands of machines reclaimed

- Reduces energy costs and carbon footprint at Google’s scale

Gemini Training Acceleration

- Optimized matrix multiplication tiling heuristics: 23% speedup on critical kernel

- Overall Gemini training time reduced by 1% end-to-end

- For training runs that consume enormous compute budgets, even a 1% reduction is a substantial saving
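
As a back-of-the-envelope check on how a 23% kernel speedup and a 1% end-to-end reduction fit together, Amdahl's law can be inverted to estimate what share of training time the kernel occupies. This assumes "23% speedup" means the kernel runs 1.23x faster and that nothing else changed; neither assumption is confirmed above, and `kernel_time_fraction` is a name invented for this sketch.

```python
def kernel_time_fraction(kernel_speedup, overall_time_reduction):
    """Solve Amdahl's law for f, the fraction of total time spent in the kernel.

    New total time = (1 - f) + f / kernel_speedup = 1 - overall_time_reduction
    """
    return overall_time_reduction / (1.0 - 1.0 / kernel_speedup)

fraction = kernel_time_fraction(kernel_speedup=1.23, overall_time_reduction=0.01)
print(f"Implied share of training time in the kernel: {fraction:.1%}")
```

Under those assumptions the kernel would account for roughly 5% of total training time, which is why a large local speedup shows up as a small end-to-end gain.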

TPU Hardware Design

- Simplified Verilog arithmetic circuits by removing redundant bits

- Maintained functional correctness through formal verification

- Integrated into upcoming Tensor Processing Unit chips

Breaking 56-Year-Old Math Records

AlphaEvolve discovered a method to multiply 4×4 complex matrices using 48 scalar multiplications, the first improvement over the 49 multiplications obtained by applying Strassen’s 1969 algorithm recursively.
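
To see where the baseline of 49 comes from, the sketch below implements the classic Strassen recursion (7 block products per 2×2 split) and counts scalar multiplications on a 4×4 input: 7 × 7 = 49, versus 64 for the schoolbook method. This is the well-known 1969 algorithm, not AlphaEvolve's 48-multiplication scheme, which is not reproduced here; the helper names are chosen for illustration.

```python
def add(A, B):
    return [[a + b for a, b in zip(ra, rb)] for ra, rb in zip(A, B)]

def sub(A, B):
    return [[a - b for a, b in zip(ra, rb)] for ra, rb in zip(A, B)]

def split(M):
    """Split a square matrix into four equal quadrants."""
    h = len(M) // 2
    return ([r[:h] for r in M[:h]], [r[h:] for r in M[:h]],
            [r[:h] for r in M[h:]], [r[h:] for r in M[h:]])

def strassen(A, B, counter):
    """Multiply square matrices whose size is a power of two.

    counter[0] is incremented once per scalar multiplication.
    """
    if len(A) == 1:
        counter[0] += 1
        return [[A[0][0] * B[0][0]]]
    a11, a12, a21, a22 = split(A)
    b11, b12, b21, b22 = split(B)
    m1 = strassen(add(a11, a22), add(b11, b22), counter)
    m2 = strassen(add(a21, a22), b11, counter)
    m3 = strassen(a11, sub(b12, b22), counter)
    m4 = strassen(a22, sub(b21, b11), counter)
    m5 = strassen(add(a11, a12), b22, counter)
    m6 = strassen(sub(a21, a11), add(b11, b12), counter)
    m7 = strassen(sub(a12, a22), add(b21, b22), counter)
    c11 = add(sub(add(m1, m4), m5), m7)
    c12 = add(m3, m5)
    c21 = add(m2, m4)
    c22 = add(add(sub(m1, m2), m3), m6)
    top = [left + right for left, right in zip(c11, c12)]
    bottom = [left + right for left, right in zip(c21, c22)]
    return top + bottom

def naive(A, B):
    """Schoolbook multiplication, used here only to check the result."""
    n = len(A)
    return [[sum(A[i][k] * B[k][j] for k in range(n)) for j in range(n)]
            for i in range(n)]

A = [[i * 4 + j + 1 for j in range(4)] for i in range(4)]
B = [[(i + 2) * (j - 1) for j in range(4)] for i in range(4)]
counter = [0]
C = strassen(A, B, counter)
print(counter[0], C == naive(A, B))
```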

Mathematical Achievements

- Tested on 50+ open problems in combinatorics, number theory, geometry

- Matched state-of-the-art solutions in 75% of cases

- Improved upon best-known constructions in 20% of cases

- Advanced the kissing number problem (11 dimensions): 593 spheres vs previous 592

Tutorial: How to Use AlphaEvolve

Step 1: Define Your Problem

Provide three components:

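    import random
    import time

    # 1. Seed algorithm (initial implementation)
    # 2. Mark sections for evolution: wrap the seed in EVOLVE-BLOCK markers
    #    so AlphaEvolve knows which code it may rewrite
    # EVOLVE-BLOCK-START
    def sort_array(arr):
        # Current bubble sort - slow but correct
        for i in range(len(arr)):
            for j in range(len(arr) - i - 1):
                if arr[j] > arr[j + 1]:
                    arr[j], arr[j + 1] = arr[j + 1], arr[j]
        return arr
    # EVOLVE-BLOCK-END

    # 3. Define evaluation function
    def generate_test_cases(n_cases=50, max_len=200, seed=0):
        # Illustrative helper (not part of any AlphaEvolve API):
        # fixed edge cases plus reproducible random arrays
        rng = random.Random(seed)
        cases = [[], [1], [2, 1], [5, 5, 5]]
        cases += [[rng.randint(-1000, 1000) for _ in range(rng.randint(0, max_len))]
                  for _ in range(n_cases)]
        return cases

    def evaluate(candidate_sort_fn):
        test_arrays = generate_test_cases()  # Random arrays, edge cases
        start_time = time.perf_counter()
        for arr in test_arrays:
            result = candidate_sort_fn(arr.copy())
            if result != sorted(arr):  # Correctness check
                return {"score": 0, "valid": False}
        runtime = time.perf_counter() - start_time
        # Higher score = faster; guard against a zero reading from the timer
        return {"score": 1 / max(runtime, 1e-9), "valid": True}

Step 2: Configure AlphaEvolve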

On Google Cloud, set parameters:

- LLM balance: 80% Gemini Flash (fast ideas), 20% Gemini Pro (breakthrough thinking)

- Population size: 50-200 candidates maintained simultaneously

- Iterations: Stop after 1,000 consecutive generations with no improvement

- Evaluation budget: Max 10,000 evaluations (adjust based on compute costs)
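
How these settings might interact can be sketched in a few lines. Everything below is hypothetical: `EvolveConfig`, `pick_model`, and `should_stop` are names invented for this illustration, not options from a documented AlphaEvolve interface.

```python
import random
from dataclasses import dataclass

@dataclass
class EvolveConfig:
    flash_ratio: float = 0.8       # share of mutations sent to the faster model
    population_size: int = 100     # candidates kept between generations
    patience: int = 1000           # generations without improvement before stopping
    max_evaluations: int = 10_000  # hard cap on evaluation runs

def pick_model(config, rng):
    """Choose which model proposes the next mutation, weighted by flash_ratio."""
    return "flash" if rng.random() < config.flash_ratio else "pro"

def should_stop(config, evaluations_used, generations_since_improvement):
    """Stop when the evaluation budget is spent or progress has stalled."""
    return (evaluations_used >= config.max_evaluations
            or generations_since_improvement >= config.patience)

config = EvolveConfig()
rng = random.Random(0)
picks = [pick_model(config, rng) for _ in range(10_000)]
flash_share = picks.count("flash") / len(picks)
print(f"flash share: {flash_share:.2f}")
```

Separating the budget cap from the patience rule keeps cost predictable even when the search keeps finding small improvements.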

Step 3: Launch and Monitor

    # Google Cloud API call (simplified)
    # Illustrative sketch only: the module, function, and parameter names below
    # are placeholders, not a documented Google Cloud API.
    from google.cloud import alphaevolve

    job = alphaevolve.create_job(
        seed_code="path/to/seed.py",
        evaluation_fn=evaluate,
        evolution_blocks=["sort_array"],
        config={
            "flash_ratio": 0.8,
            "population_size": 100,
            "max_iterations": 1000,
        },
    )

    # Monitor progress
    for generation in job.stream_results():
        print(f"Gen {generation.num}: Best score {generation.top_score}")
        print(f"Top algorithm preview:\n{generation.top_code[:200]}")

    # After convergence, retrieve final algorithm
    final_algorithm = job.get_best_solution()

Step 4: Validate and Deploy

- Review AlphaEvolve’s top candidates (human-readable code)

- Run extensive test suites beyond training evaluations

- Compare performance against baseline and competing implementations

- Deploy to production with monitoring for edge cases
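
The validation steps above can be approximated with a small harness that checks a candidate against a trusted baseline on held-out inputs and then compares timings. This is a minimal sketch; `validate`, `bubble_sort`, and the test data are assumptions made for illustration, with Python's built-in `sorted` standing in for an evolved candidate.

```python
import random
import time

def bubble_sort(arr):
    """Baseline: the slow but trusted reference implementation."""
    for i in range(len(arr)):
        for j in range(len(arr) - i - 1):
            if arr[j] > arr[j + 1]:
                arr[j], arr[j + 1] = arr[j + 1], arr[j]
    return arr

def validate(candidate, baseline, cases, repeats=3):
    """Check correctness on held-out cases, then measure speedup over baseline."""
    for case in cases:
        if candidate(list(case)) != baseline(list(case)):
            return {"valid": False, "speedup": 0.0}

    def best_time(fn):
        timings = []
        for _ in range(repeats):
            start = time.perf_counter()
            for case in cases:
                fn(list(case))
            timings.append(time.perf_counter() - start)
        # Take the minimum to reduce noise; avoid division by zero.
        return max(min(timings), 1e-12)

    return {"valid": True, "speedup": best_time(baseline) / best_time(candidate)}

# Held-out inputs: a different seed from anything used during evolution.
rng = random.Random(12345)
cases = [[3, 1, 2], [], [7]]
cases += [[rng.randint(-100, 100) for _ in range(150)] for _ in range(20)]

report = validate(sorted, bubble_sort, cases)
print(report["valid"], f"speedup x{report['speedup']:.1f}")
```

Using inputs the search never saw is the point: a candidate that only passes the evaluation set it was optimized against may have overfitted to it.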

Comparison to Traditional Optimization

| Method | Time to Result | Expertise Required | Solution Quality |
|---|---|---|---|
| Manual optimization | Weeks to months | Expert algorithm designer | High but limited by human intuition |
| Traditional genetic algorithms | Days to weeks | Evolutionary computation knowledge | Limited by mutation operator design |
| LLM code generation | Minutes | Prompt engineering | Inconsistent, prone to errors |
| AlphaEvolve | Hours to days | Define problem + evaluation | Often exceeds human experts |

What Problems Can AlphaEvolve Solve?

AlphaEvolve excels when three conditions are met:

- Automated evaluation: Performance can be measured programmatically (no human judgment needed)

- Code-based solution: Problem can be expressed as an algorithm

- Room for improvement: Current solutions are suboptimal

Ideal Use Cases

- Scientific computing: Molecular simulation, protein folding, numerical methods

- Infrastructure optimization: Load balancing, resource scheduling, routing algorithms

- Compiler optimization: Code transformations, register allocation, instruction scheduling

- Financial modeling: Portfolio optimization, risk calculation, high-frequency trading strategies

- Machine learning: Custom loss functions, data augmentation policies, neural architecture search

What It Cannot Do (Yet)

- Problems requiring human aesthetic judgment (UX design, art generation quality)

- Tasks without clear success metrics (creative writing, business strategy)

- Real-world physical experiments (robotics requires simulation for evaluation)

- Solutions needing domain knowledge not present in Gemini’s training data

How This Differs From “Regular” AI Coding

When you ask ChatGPT or Copilot to write code, they generate based on patterns seen during training. AlphaEvolve operates differently:

| Aspect | Standard LLM Coding | AlphaEvolve |
|---|---|---|
| Generation | One-shot completion | Iterative refinement over thousands of generations |
| Verification | None (user tests manually) | Automated evaluation of every candidate |
| Learning | Static (frozen weights) | Adaptive search (the solution database accumulates what works for your specific problem) |
| Output | Plausible code (may or may not work) | Optimized solutions verified against your evaluation function |
| Scope | Writes new code from descriptions | Improves existing implementations |

Private Preview Access

AlphaEvolve is available on Google Cloud through invitation-only private preview. To apply:

- Visit Google Cloud Console → AI & Machine Learning → AlphaEvolve

- Submit use case description with problem complexity, evaluation approach, expected impact

- Priority given to academic researchers, critical infrastructure optimization, scientific discovery projects

- Selected users receive dedicated support from DeepMind team during preview phase

Pricing (Expected)

Not yet disclosed, but anticipate usage-based billing:

- LLM generation costs: $X per million tokens (Flash + Pro combined)

- Evaluation compute: Standard Cloud TPU/GPU pricing for test runs

- Storage: Minimal charges for population database and artifacts

- Likely discounted or free for academic/research use during preview

The Bigger Picture: Self-Improving AI

AlphaEvolve represents a profound shift: AI improving the systems that run AI itself. It optimized Gemini’s training kernels, so future Gemini versions benefit from AlphaEvolve’s work and can in turn make AlphaEvolve more capable, creating a positive feedback loop.

What This Means Long-Term

- Algorithm design democratized: Non-experts can optimize critical code paths

- Scientific discovery accelerated: Researchers focus on defining problems, not implementing solutions

- Compute efficiency gains: Billions saved industry-wide as algorithms improve without hardware upgrades

- Open problems solved: Decades-old mathematical challenges accessible to automated discovery

Open Source Alternatives

Following AlphaEvolve’s publication, the community developed OpenEvolve, an open-source implementation by Asankhaya Sharma supporting multiple LLM providers (OpenAI, Anthropic, local models) and distributed computation. While less polished than Google’s offering, it enables experimentation without Cloud vendor lock-in.

AlphaEvolve isn’t just another AI tool; it’s AI that makes other algorithms better. By pairing Gemini’s code generation with evolutionary search and rigorous evaluation, Google created a system that discovers solutions humans had not found in more than half a century. Already deployed across Google’s infrastructure with measurable ROI, it shows that AI agents can contribute genuine scientific and engineering breakthroughs, not just assist human work.

For organizations facing complex optimization challenges where traditional approaches plateau, AlphaEvolve offers a new path: define the problem clearly, let the system explore solution space autonomously, and deploy algorithms that outperform decades of human intuition.