What Is Amazon SageMaker Catalog Data Lineage?

Amazon SageMaker Catalog’s data lineage feature records how data moves across AWS analytics services such as AWS Glue, Amazon EMR Serverless, and Amazon Redshift. You can see a dataset’s origins and the transformations applied to it in one place, instead of reconstructing that history by hand in each system.

Prerequisites for Getting Started

Before using SageMaker Catalog, make sure the following prerequisites are met:

- An active AWS account with billing enabled

- An AWS Identity and Access Management (IAM) user with administrator access, or with the specific permissions this walkthrough requires

- An S3 bucket (e.g., `datazone-{account_id}`)

- Sufficient VPC capacity in your Region, because the stack creates a new VPC

- AWS IAM Identity Center set up
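The example bucket name embeds your AWS account ID. The following minimal sketch (the helper name is hypothetical) builds that name and applies a simplified subset of the S3 bucket naming rules; the Amazon S3 documentation has the complete rules.

```python
import re

# Simplified S3 general purpose bucket naming check: 3-63 characters of
# lowercase letters, digits, dots, and hyphens, starting and ending with a
# letter or digit. Rules such as "no adjacent periods" are not checked.
BUCKET_NAME_RE = re.compile(r"[a-z0-9][a-z0-9.-]{1,61}[a-z0-9]")


def datazone_bucket_name(account_id: str) -> str:
    """Return the example bucket name datazone-{account_id}."""
    # AWS account IDs are 12-digit numbers.
    if not re.fullmatch(r"\d{12}", account_id):
        raise ValueError(f"expected a 12-digit AWS account ID, got {account_id!r}")
    name = f"datazone-{account_id}"
    if not BUCKET_NAME_RE.fullmatch(name):
        raise ValueError(f"invalid S3 bucket name: {name!r}")
    return name


if __name__ == "__main__":
    print(datazone_bucket_name("123456789012"))  # datazone-123456789012
```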

Step-by-Step Guide to Visualizing Data Lineage

The walkthrough consists of six steps:

Step 1: Configure SageMaker Unified Studio with AWS CloudFormation

Launch the `vpc-analytics-lineage-sus` stack from the provided CloudFormation template, supplying the following parameters:

- DatazoneS3Bucket

- DomainName

- EnvironmentName

- PrivateSubnet CIDRs

- ProjectName

- PublicSubnet CIDRs

- UsersList

- VpcCIDR

This configuration process takes approximately 20 minutes.
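If you launch the stack programmatically, the parameters above can be passed as a list of `ParameterKey`/`ParameterValue` pairs. The sketch below builds that list and checks, with the standard `ipaddress` module, that the subnet CIDRs sit inside the VPC CIDR without overlapping. The exact subnet parameter names (`PrivateSubnet1CIDR`, `PublicSubnet1CIDR`) and all values are hypothetical; check the template for the real keys.

```python
import ipaddress


def build_stack_parameters(values: dict) -> list:
    """Convert {name: value} into the ParameterKey/ParameterValue list that
    boto3's cloudformation create_stack(Parameters=...) expects."""
    return [{"ParameterKey": key, "ParameterValue": value} for key, value in values.items()]


def check_subnets(vpc_cidr: str, subnet_cidrs: list) -> None:
    """Raise ValueError if a subnet falls outside the VPC CIDR or two subnets overlap."""
    vpc = ipaddress.ip_network(vpc_cidr)
    subnets = [ipaddress.ip_network(cidr) for cidr in subnet_cidrs]
    for subnet in subnets:
        if not subnet.subnet_of(vpc):
            raise ValueError(f"{subnet} is not inside VPC {vpc}")
    for i, first in enumerate(subnets):
        for second in subnets[i + 1:]:
            if first.overlaps(second):
                raise ValueError(f"{first} overlaps {second}")


if __name__ == "__main__":
    params = {
        "DatazoneS3Bucket": "datazone-123456789012",
        "DomainName": "lineage-domain",  # hypothetical values from here down
        "EnvironmentName": "lineage-env",
        "ProjectName": "lineage-project",
        "UsersList": "analyst1",
        "VpcCIDR": "10.38.0.0/16",
        # Hypothetical key names: check the template for the exact subnet parameters.
        "PrivateSubnet1CIDR": "10.38.1.0/24",
        "PublicSubnet1CIDR": "10.38.10.0/24",
    }
    check_subnets(params["VpcCIDR"], [params["PrivateSubnet1CIDR"], params["PublicSubnet1CIDR"]])
    stack_params = build_stack_parameters(params)
    # To launch (requires boto3 and credentials; CAPABILITY_NAMED_IAM is an
    # assumption, needed only if the template creates named IAM resources):
    # boto3.client("cloudformation").create_stack(
    #     StackName="vpc-analytics-lineage-sus", TemplateURL=..., Parameters=stack_params,
    #     Capabilities=["CAPABILITY_NAMED_IAM"])
    print(stack_params[0])
```

Validating the CIDRs locally catches overlap mistakes before CloudFormation spends time creating resources.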

Step 2: Prepare Your Data

Upload sample data files (attendance.csv and employees.csv) to the designated S3 bucket (e.g., s3://datazone-{account_id}/csv/).
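The upload can be scripted. This is a sketch, assuming boto3 and configured AWS credentials for the actual transfer; `plan_uploads` and `s3_key` are hypothetical helpers that only compute destinations, so they run without boto3.

```python
from pathlib import PurePosixPath


def s3_key(prefix: str, filename: str) -> str:
    """Join a prefix such as 'csv/' with a file's base name into an object key."""
    base = PurePosixPath(filename).name
    prefix = prefix.strip("/")
    return f"{prefix}/{base}" if prefix else base


def plan_uploads(bucket: str, prefix: str, filenames: list) -> dict:
    """Map each local file to the S3 URI it will be uploaded to."""
    return {name: f"s3://{bucket}/{s3_key(prefix, name)}" for name in filenames}


def upload(bucket: str, prefix: str, filenames: list) -> None:
    """Upload the files with boto3 (requires boto3 and AWS credentials)."""
    import boto3  # imported lazily so the planning helpers work without boto3

    s3 = boto3.client("s3")
    for name in filenames:
        s3.upload_file(name, bucket, s3_key(prefix, name))


if __name__ == "__main__":
    plan = plan_uploads("datazone-123456789012", "csv/", ["attendance.csv", "employees.csv"])
    for local, uri in plan.items():
        print(local, "->", uri)
```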

Then, ingest the employee data from the employees.csv file into an Amazon RDS for MySQL table:

- Connect to the MySQL instance using the MySQL client

- Create a database named employeedb

- Create a table named employee within that database

The employee table schema includes the following columns:

- EmployeeID (text)

- Name (text)

- Department (text)

- Role (text)

- HireDate (text)

- Salary (decimal)

- PerformanceRating (integer)

- Shift (text)

- Location (text)

Populate the employee table with data from the employees.csv file.

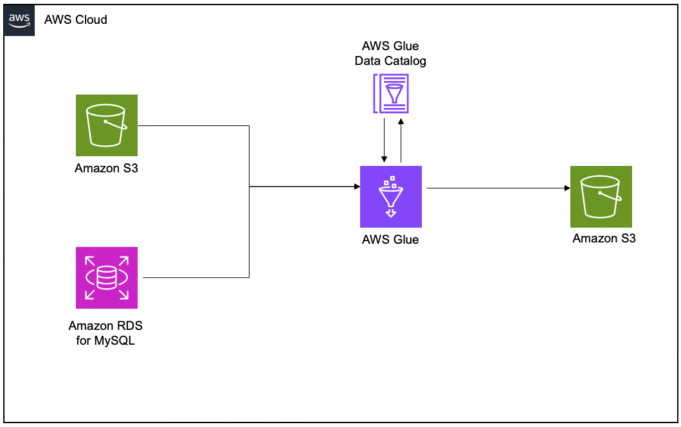
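As an alternative to typing the statements in the MySQL client, the following sketch generates the DDL for the schema above and turns employees.csv content into a parameterized INSERT. It assumes the CSV has a header row whose names match the column names exactly, and `DECIMAL(10, 2)` is an assumed precision for Salary; the function names are hypothetical.

```python
import csv
import io

# Employee table columns from the schema above. DECIMAL(10, 2) is an assumed
# precision for Salary; the schema only says "decimal".
EMPLOYEE_COLUMNS = [
    ("EmployeeID", "TEXT"),
    ("Name", "TEXT"),
    ("Department", "TEXT"),
    ("Role", "TEXT"),
    ("HireDate", "TEXT"),
    ("Salary", "DECIMAL(10, 2)"),
    ("PerformanceRating", "INT"),
    ("Shift", "TEXT"),
    ("Location", "TEXT"),
]


def create_statements(database: str = "employeedb", table: str = "employee") -> list:
    """MySQL DDL for the database and the employee table."""
    columns = ",\n  ".join(f"`{name}` {sql_type}" for name, sql_type in EMPLOYEE_COLUMNS)
    return [
        f"CREATE DATABASE IF NOT EXISTS `{database}`",
        f"CREATE TABLE IF NOT EXISTS `{database}`.`{table}` (\n  {columns}\n)",
    ]


def insert_statement_and_rows(csv_text: str, table: str = "employee"):
    """Parse employees.csv content into a parameterized INSERT plus row tuples.
    Assumes a header row whose names match EMPLOYEE_COLUMNS."""
    names = [name for name, _ in EMPLOYEE_COLUMNS]
    reader = csv.DictReader(io.StringIO(csv_text))
    rows = [tuple(record[name] for name in names) for record in reader]
    column_list = ", ".join(f"`{name}`" for name in names)
    placeholders = ", ".join(["%s"] * len(names))
    return f"INSERT INTO `{table}` ({column_list}) VALUES ({placeholders})", rows


if __name__ == "__main__":
    for statement in create_statements():
        print(statement + ";")
```

With a DB-API driver that uses `%s` placeholders, such as PyMySQL, you would run each DDL statement with `cursor.execute`, connect to employeedb, load the rows with `cursor.executemany(sql, rows)`, and commit.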

Step 3: Capture Lineage from AWS Glue ETL Job

Create a new AWS Glue ETL job, enable the “Generate lineage events” option, and provide the ID of the domain created in Step 1.

Use the provided Python code as the AWS Glue ETL job script. The script performs the following actions:

- Reads employee data from the MySQL database using JDBC connection details extracted from a Glue connection named “connectionname”

- Reads attendance data from the S3 bucket

- Joins the two datasets on “EmployeeID”

- Writes the resulting joined data as a Parquet file to an S3 location

- Saves it as a table named “gluedbname.tablename” in the Glue Data Catalog

Run the job and confirm that it completes successfully.
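The Glue script performs its join with Spark. To make the join semantics concrete, here is a plain-Python hash join over row dictionaries. It is an illustration of an inner join on EmployeeID (assumed here because inner is Spark's default join type), not the Glue script itself, and the field names other than EmployeeID are hypothetical.

```python
def inner_join_on_employee_id(employees: list, attendance: list, key: str = "EmployeeID") -> list:
    """Inner join two lists of row dicts on `key`.

    Rows without a match on the other side are dropped, as in an inner join.
    If both sides share a non-key column name, the attendance value wins here.
    """
    # Index employee rows by key so each attendance row is matched with one lookup.
    by_key = {}
    for employee in employees:
        by_key.setdefault(employee[key], []).append(employee)

    joined = []
    for record in attendance:
        for employee in by_key.get(record[key], []):
            joined.append({**employee, **record})
    return joined


if __name__ == "__main__":
    # Toy rows for illustration only.
    employees = [{"EmployeeID": "E1", "Name": "Ann"}, {"EmployeeID": "E2", "Name": "Bob"}]
    attendance = [{"EmployeeID": "E1", "Absent": "false"}, {"EmployeeID": "E3", "Absent": "true"}]
    print(inner_join_on_employee_id(employees, attendance))
    # [{'EmployeeID': 'E1', 'Name': 'Ann', 'Absent': 'false'}]
```

E2 (no attendance) and E3 (no employee record) are both dropped, which is the behavior to expect in the joined Parquet output if the script uses an inner join.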

Step 4: Capture Lineage from AWS Glue Notebook

Create an AWS Glue notebook, use the provided code, and add the Spark configuration required to generate lineage.

The configuration specifies:

- OpenLineage Spark listener

- Amazon DataZone API transport type

- Domain ID

- AWS account ID

- Custom environment variables

- Glue job name

The Python code reads attendance data from an S3 bucket, writes it as a Parquet file to another S3 location, and saves it as a table named “gluedbname.tablename” in the Glue Data Catalog.
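The configuration items listed above map to Spark properties. The sketch below assembles them and renders them as a chained `--conf` value. `spark.extraListeners`, `spark.openlineage.transport.type=amazon_datazone_api`, `spark.openlineage.transport.domainId`, and `spark.openlineage.facets.custom_environment_variables` follow the OpenLineage Spark integration; the `spark.glue.accountId` and `spark.glue.JOB_NAME` keys, the `[A;B;]` list syntax, and all example values are assumptions to verify against the provided notebook code.

```python
def lineage_spark_conf(domain_id: str, account_id: str, job_name: str, env_vars: list) -> dict:
    """Spark properties for emitting OpenLineage events to the Amazon DataZone API."""
    return {
        # OpenLineage Spark listener.
        "spark.extraListeners": "io.openlineage.spark.agent.OpenLineageSparkListener",
        # Send events to the Amazon DataZone API for the given domain.
        "spark.openlineage.transport.type": "amazon_datazone_api",
        "spark.openlineage.transport.domainId": domain_id,
        # Assumed Glue-specific keys for account ID and job name.
        "spark.glue.accountId": account_id,
        "spark.glue.JOB_NAME": job_name,
        # Environment variables to attach as a custom facet (assumed [A;B;] syntax).
        "spark.openlineage.facets.custom_environment_variables":
            "[" + "".join(f"{name};" for name in env_vars) + "]",
    }


def to_conf_value(conf: dict) -> str:
    """Chain properties as 'k1=v1 --conf k2=v2 ...', the form used as the value
    of a single --conf parameter in Glue."""
    return " --conf ".join(f"{key}={value}" for key, value in conf.items())


if __name__ == "__main__":
    # Hypothetical domain ID, account ID, and job name.
    conf = lineage_spark_conf("dzd_example", "123456789012", "lineage-notebook",
                              ["AWS_DEFAULT_REGION", "GLUE_VERSION"])
    print(to_conf_value(conf))
```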

Step 5: Capture Lineage from Amazon Redshift

Create the employee and absent tables in Amazon Redshift.

Absent Table Schema

- employeeid (text)

- date (date)

- shiftstart (timestamp)

- shiftend (timestamp)

- absent (boolean)

- overtimehours (integer)

Employee Table Schema

- employeeid (text)

- name (text)

- department (text)

- role (text)

- hiredate (date)

- salary (double precision)

- performancerating (integer)

- shift (text)

- location (text)
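The two schemas above translate directly into Redshift DDL. This sketch (hypothetical helper name) generates the statements, double-quoting every identifier so that a column such as date, which is also a SQL type name, is handled safely. Note that Redshift stores TEXT columns as VARCHAR(256).

```python
# Column definitions from the absent and employee schemas above.
ABSENT_COLUMNS = [
    ("employeeid", "TEXT"),
    ("date", "DATE"),
    ("shiftstart", "TIMESTAMP"),
    ("shiftend", "TIMESTAMP"),
    ("absent", "BOOLEAN"),
    ("overtimehours", "INTEGER"),
]
EMPLOYEE_COLUMNS = [
    ("employeeid", "TEXT"),
    ("name", "TEXT"),
    ("department", "TEXT"),
    ("role", "TEXT"),
    ("hiredate", "DATE"),
    ("salary", "DOUBLE PRECISION"),
    ("performancerating", "INTEGER"),
    ("shift", "TEXT"),
    ("location", "TEXT"),
]


def create_table_sql(table: str, columns: list) -> str:
    """Build a Redshift CREATE TABLE statement with double-quoted identifiers."""
    body = ",\n    ".join(f'"{name}" {sql_type}' for name, sql_type in columns)
    return f'CREATE TABLE IF NOT EXISTS "{table}" (\n    {body}\n);'


if __name__ == "__main__":
    print(create_table_sql("absent", ABSENT_COLUMNS))
    print(create_table_sql("employee", EMPLOYEE_COLUMNS))
```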

Load data from the corresponding CSV files in Amazon S3 into these tables using the COPY command, specifying an IAM role that allows Amazon Redshift to read from the bucket. Finally, create a new table called employeewithabsent in Amazon Redshift by joining the employee and absent tables on the employeeid column.
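The load and join steps can be sketched as generated SQL. This assumes that employees.csv loads into employee and attendance.csv into absent, that each file has one header row, and that its column order matches the table, because COPY without a column list maps fields by position. The IAM role ARN is hypothetical. The join lists the absent columns explicitly so that employeeid appears only once in the new table.

```python
def copy_sql(table: str, s3_uri: str, iam_role_arn: str) -> str:
    """COPY a CSV file with one header row from S3 into a Redshift table."""
    return (
        f'COPY "{table}"\n'
        f"FROM '{s3_uri}'\n"
        f"IAM_ROLE '{iam_role_arn}'\n"
        "FORMAT AS CSV\n"
        "IGNOREHEADER 1\n"
        "DATEFORMAT 'auto'\n"
        "TIMEFORMAT 'auto';"
    )


# Inner join on employeeid; absent columns are listed so employeeid is not duplicated.
EMPLOYEE_WITH_ABSENT_SQL = """CREATE TABLE employeewithabsent AS
SELECT e.*,
       a."date",
       a.shiftstart,
       a.shiftend,
       a.absent,
       a.overtimehours
FROM employee AS e
JOIN absent AS a
  ON e.employeeid = a.employeeid;"""


if __name__ == "__main__":
    role = "arn:aws:iam::123456789012:role/RedshiftCopyRole"  # hypothetical role
    print(copy_sql("employee", "s3://datazone-123456789012/csv/employees.csv", role))
    print(copy_sql("absent", "s3://datazone-123456789012/csv/attendance.csv", role))
    print(EMPLOYEE_WITH_ABSENT_SQL)
```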

Step 6: Capture Lineage from EMR Serverless Job

Create an Amazon EMR Serverless application for Spark with the specified configuration, which enables Apache Iceberg support.

Submit a Spark application to generate lineage events. The Spark application:

- Reads employee data from the MySQL database via JDBC

- Reads attendance data from the Redshift database via JDBC

- Joins the dataframes on the EmployeeID column

- Writes the resulting joined dataframe as a Parquet file to an S3 location

- Registers it as a table in the Glue Data Catalog
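The reads and the write described above use Spark's generic JDBC source and DataFrame writer. In this sketch the helper names, hosts, and table names are hypothetical; 3306 and 5439 are the default MySQL and Redshift ports, and both driver JARs must be on the job's classpath. Only the option builders run without Spark.

```python
def mysql_jdbc_options(host: str, user: str, password: str,
                       database: str = "employeedb", table: str = "employee",
                       port: int = 3306) -> dict:
    """Spark JDBC read options for the MySQL employee table."""
    return {
        "url": f"jdbc:mysql://{host}:{port}/{database}",
        "dbtable": table,
        "user": user,
        "password": password,
        "driver": "com.mysql.cj.jdbc.Driver",  # MySQL Connector/J 8.x driver class
    }


def redshift_jdbc_options(host: str, user: str, password: str,
                          database: str, table: str, port: int = 5439) -> dict:
    """Spark JDBC read options for a Redshift table."""
    return {
        "url": f"jdbc:redshift://{host}:{port}/{database}",
        "dbtable": table,
        "user": user,
        "password": password,
        "driver": "com.amazon.redshift.jdbc42.Driver",
    }


def read_jdbc(spark, options: dict):
    """Load a JDBC table as a DataFrame; `spark` is an existing SparkSession."""
    return spark.read.format("jdbc").options(**options).load()


def join_and_save(employees_df, attendance_df, s3_path: str, table_name: str,
                  key: str = "EmployeeID"):
    """Join on `key`, write Parquet to s3_path, and register the table in the catalog."""
    joined = employees_df.join(attendance_df, on=key, how="inner")
    (joined.write.mode("overwrite")
        .format("parquet")
        .option("path", s3_path)
        .saveAsTable(table_name))
    return joined


if __name__ == "__main__":
    # In practice, fetch credentials from a secret store rather than hard-coding them.
    print(mysql_jdbc_options("mysql.example.internal", "admin", "change-me")["url"])
```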

Submit the Spark job with the `aws emr-serverless start-job-run` command, specifying:

- Application ID

- Execution role ARN

- Job name

- Job driver details including the entry point script

- Spark configuration parameters for executor and driver memory

- AWS Glue Data Catalog configuration

- OpenLineage listener settings

- DataZone API transport details

- Necessary JAR files for MySQL connectivity
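The items listed above can be assembled into a `start-job-run` invocation. This sketch builds the `sparkSubmitParameters` string and the CLI argument list (suitable for `subprocess.run`). The application ID, role ARN, job name, script and JAR locations, and memory sizes are hypothetical; the Glue Data Catalog factory class and the OpenLineage properties are the commonly documented ones, but verify them against your EMR release.

```python
import json

GLUE_CATALOG_FACTORY = "com.amazonaws.glue.catalog.metastore.AWSGlueDataCatalogHiveClientFactory"


def spark_submit_parameters(domain_id: str, mysql_jar_uri: str,
                            executor_memory: str = "4g", driver_memory: str = "4g") -> str:
    """Build the sparkSubmitParameters string for the job driver."""
    conf = {
        # Executor and driver memory (sizes here are placeholders).
        "spark.executor.memory": executor_memory,
        "spark.driver.memory": driver_memory,
        # Use the AWS Glue Data Catalog as the metastore.
        "spark.hadoop.hive.metastore.client.factory.class": GLUE_CATALOG_FACTORY,
        # OpenLineage listener and Amazon DataZone API transport.
        "spark.extraListeners": "io.openlineage.spark.agent.OpenLineageSparkListener",
        "spark.openlineage.transport.type": "amazon_datazone_api",
        "spark.openlineage.transport.domainId": domain_id,
    }
    parts = [f"--conf {key}={value}" for key, value in conf.items()]
    # MySQL JDBC driver JAR, for example a Connector/J JAR uploaded to S3.
    parts.append(f"--jars {mysql_jar_uri}")
    return " ".join(parts)


def start_job_run_argv(application_id: str, role_arn: str, job_name: str,
                       entry_point: str, submit_params: str) -> list:
    """Argument list for `aws emr-serverless start-job-run`."""
    job_driver = {"sparkSubmit": {"entryPoint": entry_point,
                                  "sparkSubmitParameters": submit_params}}
    return [
        "aws", "emr-serverless", "start-job-run",
        "--application-id", application_id,
        "--execution-role-arn", role_arn,
        "--name", job_name,
        "--job-driver", json.dumps(job_driver),
    ]


if __name__ == "__main__":
    params = spark_submit_parameters("dzd_example",
                                     "s3://datazone-123456789012/jars/mysql-connector-j.jar")
    argv = start_job_run_argv("00example", "arn:aws:iam::123456789012:role/EMRServerlessJobRole",
                              "lineage-emr-job",
                              "s3://datazone-123456789012/scripts/emr_lineage.py", params)
    print(argv)
```

Building an argument list rather than a shell string avoids quoting problems with the JSON job driver.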

Real-World Example: Employee Attendance Analysis

The walkthrough above is built around employee attendance analysis. In SageMaker Catalog, you can trace the unified dataset in the Data Catalog back through the AWS Glue ETL job that produced it to its raw sources: the employee table in Amazon RDS for MySQL and the attendance file in Amazon S3.
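SageMaker Catalog builds and displays the lineage graph for you. As a conceptual sketch only, the following walks a hand-written map of upstream edges breadth-first to recover every ancestor of the unified table and, among them, the raw sources. It does not call any SageMaker Catalog API, and the node names (including the job name `etl-join-job`) are hypothetical.

```python
from collections import deque

# Hypothetical lineage edges: each node maps to the nodes it was derived from.
UPSTREAM = {
    "glue_catalog:gluedbname.tablename": ["glue_job:etl-join-job"],
    "glue_job:etl-join-job": [
        "mysql:employeedb.employee",
        "s3://datazone-{account_id}/csv/attendance.csv",
    ],
}


def trace_upstream(node: str, upstream: dict) -> list:
    """Breadth-first walk returning every ancestor of node, nearest first."""
    seen = set()
    order = []
    queue = deque([node])
    while queue:
        current = queue.popleft()
        for parent in upstream.get(current, []):
            if parent not in seen:
                seen.add(parent)
                order.append(parent)
                queue.append(parent)
    return order


def raw_sources(node: str, upstream: dict) -> list:
    """Ancestors with no upstream inputs of their own, i.e. the original sources."""
    return [n for n in trace_upstream(node, upstream) if n not in upstream]


if __name__ == "__main__":
    print(raw_sources("glue_catalog:gluedbname.tablename", UPSTREAM))
```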

Best Practices for Optimal Data Lineage

For optimal data lineage, consider these best practices:

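- Automate lineage capture by enabling lineage generation in your AWS Glue jobs, Glue notebooks, and EMR Serverless jobs instead of documenting data flows manually

- Use lineage information to monitor data quality: trace an issue back to its upstream source and identify the downstream datasets it affects

- Keep data sources up to date so that the lineage graph remains accurate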

Next Steps: Explore and Learn More

See the Amazon SageMaker and Amazon SageMaker Catalog documentation for complete details on capturing and visualizing data lineage.