This incident underscores the critical need for vigilance when downloading and using AI-powered applications, even from seemingly reputable sources.

Two years ago, the launch of OpenAI’s GPT-4 API unleashed a torrent of AI-powered apps onto the App Store. Developers rushed to capitalize on the AI hype with everything from productivity boosters to virtual companions. Many of these early entrants have since vanished, casualties of waning interest and Apple’s tougher stance on deceptive apps.

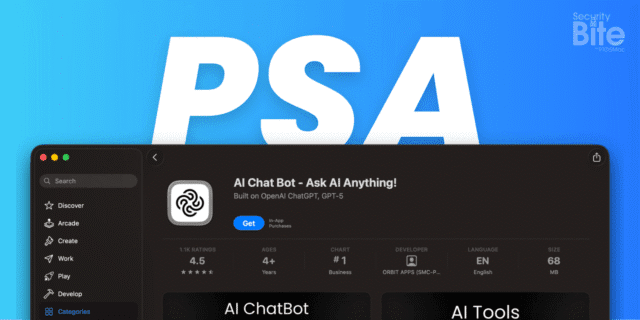

However, as security researcher Alex Kleber recently pointed out, some of these impostors are still slipping through the cracks. A prime example is an AI chatbot that brazenly mimics OpenAI’s branding and functionality and has achieved surprising success in the Business category of the Mac App Store.

A Closer Look at the Clone

The app in question, simply named “AI ChatBot,” copies OpenAI’s visual identity and interface and even purports to run on the same logic. Further investigation links it to a second, nearly identical app: the two share the same interface, the same screenshots, and a support website that simply directs users to a generic Google page. Both apps are tied to the same developer account and list a company address in Pakistan.

Despite Apple’s efforts to purge copycat apps, both made it through the review process and secured prominent positions on the U.S. Mac App Store. That raises questions about how effective App Store screening really is and how easily similar scams could proliferate.

It’s easy to assume that a high ranking or App Store approval guarantees an app’s safety, but that is a dangerous misconception. As a recent report highlights, many productivity apps collect a troubling amount of user data while offering little transparency about it.

One particularly concerning example involved an AI assistant that collected far more user data than its App Store listing indicated. The listing claimed the app gathered only messages and device IDs for functional purposes, yet its privacy policy disclosed the collection of names, email addresses, usage statistics, and device information. Data of this kind is often sold to brokers or put to nefarious use.

“Any GPT clone app that collects user inputs tied to real names is a recipe for disaster.”

Imagine a vast database of conversations, each message linked to a specific individual, residing on a vulnerable server managed by a shadowy entity with a dubious privacy policy. This is a chillingly realistic scenario.

Apple’s App Store privacy labels were introduced to empower users with information about data collection practices. However, these labels are self-reported, relying on the honesty of developers. This creates a loophole that unscrupulous actors can exploit, stretching the truth or outright lying about their data handling practices.

In practice, Apple lacks a robust system for verifying the accuracy of these labels, which leaves users vulnerable to deception.

The presence of these deceptive apps makes one thing clear: due diligence is essential. Users must stay vigilant and question the legitimacy of AI-powered applications, even those found on a trusted platform like the Mac App Store.

The battle against AI-related scams is far from over. As AI technology evolves, so will the tactics of those seeking to exploit it. Staying informed and raising awareness remain the best defenses against these threats.