Scale of the Breaches

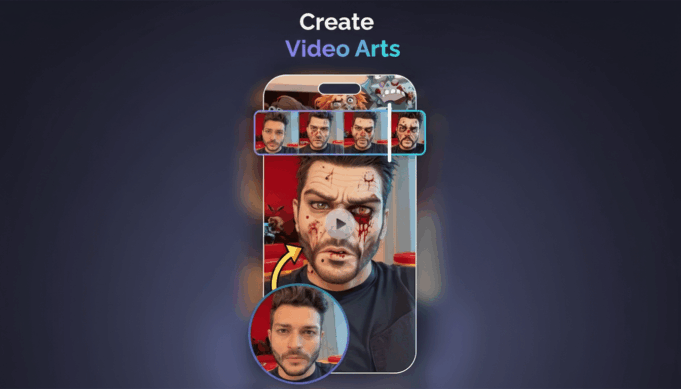

“Video AI Art Generator & Maker,” an app with over 500,000 installs, exposed more than 12 terabytes of user-uploaded media stored on misconfigured Amazon S3 buckets. Users’ personal photos and AI-generated content remained publicly accessible without authentication requirements.

IDMerit, a know-your-customer (KYC) verification app used for identity authentication, leaked sensitive documents including government-issued IDs, passports, full names, physical addresses, and facial recognition photos. The exposure occurred through improperly secured cloud storage that required no credentials for access.

Researchers at a security firm identified the vulnerabilities in two ways: routine scanning for exposed cloud storage endpoints, and reverse engineering of APK files, which revealed plaintext API keys and storage bucket URLs embedded directly in application code.

Root Causes of Exposure

The primary vulnerability stems from developers hardcoding authentication credentials into compiled applications. When API keys and cloud storage access tokens are embedded in APK files, anyone with basic reverse-engineering tools can extract them and reach whatever storage those credentials unlock. For an over-permissioned key, that can be the developer’s entire storage infrastructure.
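One way to avoid shipping long-lived keys in an APK is for a backend the developer controls to hand the app short-lived signed URLs instead. The sketch below is a generic HMAC-based illustration of that idea, not the signing scheme of Amazon S3 or any other provider. `SIGNING_KEY`, `sign_url`, `verify_url`, and the `media.example.com` host used in the note afterward are hypothetical names chosen for the example.

```python
import hashlib
import hmac
import time
from typing import Optional
from urllib.parse import parse_qs, urlencode, urlparse

# Hypothetical server-side secret. In a real deployment it would come from the
# environment or a secrets manager and would never be compiled into the app.
SIGNING_KEY = b"server-side-secret-never-shipped-in-the-apk"


def _signature(path: str, expires: int) -> str:
    """HMAC-SHA256 over the path and expiry, hex encoded."""
    message = f"{path}\n{expires}".encode()
    return hmac.new(SIGNING_KEY, message, hashlib.sha256).hexdigest()


def sign_url(base_url: str, path: str, ttl_seconds: int,
             now: Optional[float] = None) -> str:
    """Return a URL for `path` that stops validating after `ttl_seconds`."""
    issued = time.time() if now is None else now
    expires = int(issued) + ttl_seconds
    query = urlencode({"expires": expires, "sig": _signature(path, expires)})
    return f"{base_url}{path}?{query}"


def verify_url(url: str, now: Optional[float] = None) -> bool:
    """Accept the URL only if it is unexpired and the signature matches."""
    parsed = urlparse(url)
    params = parse_qs(parsed.query)
    try:
        expires = int(params["expires"][0])
        supplied = params["sig"][0]
    except (KeyError, ValueError):
        return False  # missing or malformed parameters
    current = time.time() if now is None else now
    if current > expires:
        return False  # link has expired
    expected = _signature(parsed.path, expires)
    # Constant-time comparison avoids leaking how many characters matched.
    return hmac.compare_digest(supplied.encode(), expected.encode())
```

In this arrangement the server would call something like `sign_url("https://media.example.com", "/uploads/u1/photo.jpg", 300)` after authenticating the user, and the storage front end would call `verify_url` before serving the file. A URL extracted from the app or from network traffic stops working once the expiry passes, and the signing key itself never leaves the server.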

Android application packages are not encrypted binaries; an APK is a ZIP archive. Tools like APKTool and JADX allow security researchers, or malicious actors, to decompile apps and view embedded strings, including cloud service credentials. Once extracted, these credentials grant access to storage buckets containing all user-uploaded data.
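To illustrate how little effort this takes, the following minimal sketch opens an APK as an ordinary ZIP archive and searches each entry for byte patterns that resemble an AWS access key ID or an S3 bucket hostname. It is an assumption-laden illustration, not the researchers’ actual tooling: the two patterns and the `scan_apk` function are hypothetical, and the scan only catches strings stored as plain ASCII bytes. A decompiler such as JADX gives a fuller view.

```python
import re
import zipfile

# Illustrative patterns only; real secret scanners use far larger rule sets.
PATTERNS = {
    # AWS access key IDs commonly start with "AKIA" followed by 16 characters.
    "aws_access_key_id": re.compile(rb"AKIA[0-9A-Z]{16}"),
    # Hostnames such as <bucket>.s3.amazonaws.com or <bucket>.s3.<region>.amazonaws.com
    "s3_bucket_url": re.compile(
        rb"[a-z0-9][a-z0-9.\-]{1,61}[a-z0-9]\.s3[.\-](?:[a-z0-9\-]+\.)?amazonaws\.com"
    ),
}


def scan_apk(apk_path):
    """Return sorted (entry_name, rule_name, match) tuples found in the archive.

    `apk_path` may be a filesystem path or a binary file-like object.
    """
    findings = set()
    with zipfile.ZipFile(apk_path) as apk:
        for name in apk.namelist():
            data = apk.read(name)  # raw bytes of classes.dex, assets, etc.
            for rule, pattern in PATTERNS.items():
                for match in pattern.finditer(data):
                    findings.add((name, rule, match.group().decode("ascii")))
    return sorted(findings)
```

Developers can run the same kind of check against their own release builds before publishing. Any hit means the value is readable by anyone who downloads the app.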

Additional security failures include disabled authentication requirements on cloud storage buckets, lack of encryption for data at rest, absence of access logging to detect unauthorized queries, and failure to implement time-limited signed URLs for content delivery.
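The first of these failures, storage that accepts anonymous requests, can often be spotted in the bucket policy itself. The sketch below parses an S3-style bucket policy document and flags `Allow` statements whose principal is the wildcard `*`. The function name `anonymous_allow_statements` is hypothetical, and the check is deliberately narrow: it skips any statement that carries a `Condition`, and it does not look at ACLs, account-level public access settings, encryption, or logging.

```python
import json


def _as_list(value):
    """Policy fields may hold a single item or a list; normalize to a list."""
    return value if isinstance(value, list) else [value]


def anonymous_allow_statements(policy_json: str) -> list:
    """Return Allow statements that grant access to everyone unconditionally."""
    policy = json.loads(policy_json)
    flagged = []
    for stmt in _as_list(policy.get("Statement", [])):
        # Simplification: a Condition may still be permissive, but we skip it.
        if stmt.get("Effect") != "Allow" or "Condition" in stmt:
            continue
        principal = stmt.get("Principal")
        if principal == "*":
            flagged.append(stmt)
        elif isinstance(principal, dict) and "*" in _as_list(principal.get("AWS", [])):
            flagged.append(stmt)
    return flagged
```

An empty result does not prove a bucket is private, since other settings can also expose it. A non-empty result is a strong sign that objects can be read or written without credentials.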

Data Collection Chain

AI applications typically process data through multiple stages: users upload photos, videos, or documents; apps transmit this data to cloud servers for processing; AI models generate outputs stored alongside original uploads; and both input and output files persist in third-party storage indefinitely unless explicitly deleted.

Many apps claim on-device processing but still upload data for “model improvement” or analytics. The Data Safety labels on Google Play are self-reported by developers and not independently verified, allowing discrepancies between stated and actual data practices.

User Protection Measures

Security experts recommend evaluating developer credibility through company registration information, security contact availability, and update frequency before installation. Apps from established entities with documented security practices present lower risk than those from anonymous developers.

Users should review permission requests and grant only necessary access. Android’s permission system allows photo selection without full storage access, but many apps request broad permissions they don’t require for core functionality. The least-privilege principle applies: if an app functions with limited access, don’t grant more.

Sensitive documents like passports, driver’s licenses, and biometric data should not be uploaded to AI apps unless the service provider is a verified financial institution or government entity. Apps offering on-device processing with explicit data collection opt-outs provide better privacy protection than cloud-dependent alternatives.

Platform Response

Google operates the App Defense Alliance, which conducts security reviews before apps reach Google Play, but backend infrastructure misconfigurations fall outside the scanning program’s scope. The initiative focuses on malware detection and code analysis rather than auditing developers’ cloud storage configurations.

Google’s Data Safety program requires developers to disclose data collection practices, but enforcement relies on user reports rather than proactive verification. The company has removed apps following security disclosures but does not systematically audit cloud storage security for published applications.

The incidents highlight gaps in mobile app security where platform-level protections cannot prevent developer-side infrastructure failures. Unlike web services, where browsers enforce same-origin policies and HTTPS requirements, mobile apps operate with broader system access once permissions are granted. They can therefore collect more personal data, which makes backend security failures more consequential.

Industry Pattern

Similar exposures have affected AI apps across categories including photo editing, face filters, document scanning, and voice synthesis tools. The pattern suggests many developers prioritize rapid feature deployment over security fundamentals, particularly in the competitive AI app market where time-to-market pressures discourage thorough security reviews.

Until app stores implement backend security auditing or developers adopt secure credential management practices, such as keeping secrets on the server in environment variables or a secrets management service rather than in the app itself, users bear primary responsibility for evaluating app trustworthiness before sharing personal data with AI applications.
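On the developer side, the environment-variable approach applies to backend code, not to the app shipped to users. A minimal sketch, assuming hypothetical variable names `STORAGE_ACCESS_KEY_ID` and `STORAGE_SECRET_ACCESS_KEY` and a hypothetical helper `load_storage_credentials`, might look like this:

```python
import os


class MissingSecretError(RuntimeError):
    """Raised when a required secret is not present in the environment."""


def load_storage_credentials(env=os.environ):
    """Read storage credentials from the environment instead of source code.

    The variable names are hypothetical; `env` can be any mapping, which
    makes the function easy to test without touching the real environment.
    """
    names = ("STORAGE_ACCESS_KEY_ID", "STORAGE_SECRET_ACCESS_KEY")
    missing = [name for name in names if not env.get(name)]
    if missing:
        # Fail at startup rather than falling back to a hardcoded default.
        raise MissingSecretError("missing: " + ", ".join(missing))
    return {name: env[name] for name in names}
```

Because the values live only in the server’s runtime environment, decompiling the mobile app reveals nothing that grants direct access to storage.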
