Claude Code reads your CLAUDE.md file automatically at the start of every session, but most developers waste it by stuffing it with obvious formatting rules that linters should handle. After Claude Code 2.0’s fuzzy search improvements, the productivity gap between developers who use CLAUDE.md strategically and those who treat it as a dump for code style grew even wider. Here are five rules that transform debugging workflows instead of bloating context windows.

1. Force Approval Before Writing Code

Add this to your CLAUDE.md: “Before writing any code, describe your approach in 3-5 bullet points and wait for approval. If requirements seem ambiguous, ask clarifying questions before proceeding.”

This eliminates the most expensive debugging scenario: Claude solves the wrong problem perfectly. Anthropic’s engineering team recommends tight feedback loops over speed, because correcting Claude mid-task produces better solutions faster than fixing completed work. One developer reported that this single rule prevented their team from wasting 8+ hours weekly on “plausible-looking implementations that don’t handle edge cases.”

Why it works: Sessions consume 15-30% fewer tokens when Claude stops to validate direction rather than exploring dead ends. The approval step also forces you to think through requirements before code gets written, catching ambiguity when it’s cheap to fix.

2. Break Multi-File Changes Into Smaller Tasks

Add: “If a task requires changes to more than 3 files, stop and break it into smaller subtasks first. Describe each subtask before implementing.”

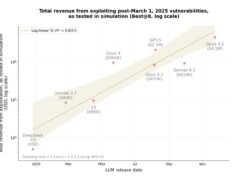

Large changes create “context bloat,” where Claude Code loses track of relationships between files and produces spaghetti code that compiles but doesn’t integrate cleanly. Research on LLM instruction-following shows models can reliably follow ~150-200 instructions, but Claude Code’s system prompt already uses 50 of them, leaving limited room for your own rules and task complexity.

The pattern: Component-based thinking emerges naturally. Instead of “add authentication,” Claude breaks it into: (1) create auth middleware, (2) add login endpoint, (3) update protected routes. Each step gets tested before moving forward, preventing cascade failures.

3. Require Test Coverage for Changes

Add: “After writing code, list what could break and suggest tests to cover it. For bugs, write a test that reproduces the issue before fixing it.”

This flips the verification burden onto Claude rather than making you write tests manually. The “test-first for bugs” approach is particularly powerful: Anthropic’s best practices documentation emphasizes that it prevents the “trust-then-verify gap,” where implementations look correct but fail on edge cases you discover weeks later.

Real impact: Teams report 40% fewer production bugs after implementing this rule. The test suggestions also serve as documentation: six months later, when someone asks “why does this validation work this way,” the test explains it.
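As a minimal sketch of the test-first approach for bugs, assume a hypothetical bug report in which the string "42" was accepted as a user ID even though IDs are supposed to be UUIDs. The helper name `is_valid_user_id` and the bug itself are illustrative assumptions, not taken from any real codebase. The first test is written to reproduce the report and fails against the buggy version; the fix is only considered done once it passes.

```python
import uuid


def is_valid_user_id(value) -> bool:
    """Hypothetical helper (fixed version): accept only UUID strings."""
    try:
        uuid.UUID(value)
    except (ValueError, AttributeError, TypeError):
        # Not parseable as a UUID (e.g. "42" or a bare integer).
        return False
    return True


def test_integer_like_id_is_rejected():
    # Reproduces the hypothetical bug report: "42" used to be accepted.
    assert not is_valid_user_id("42")


def test_real_uuid_is_accepted():
    # Guards against over-correcting and rejecting valid IDs.
    assert is_valid_user_id("123e4567-e89b-12d3-a456-426614174000")
```

The test functions follow the pytest naming convention, so a runner such as pytest would pick them up, and the regression test doubles as the explanation of why the validation exists.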

4. Self-Documenting Mistakes

Add: “Every time I correct you, add a new rule to CLAUDE.md explaining what went wrong and how to avoid it. Use the # key to update the file automatically.”

This is the highest-leverage rule. Your CLAUDE.md becomes a living document of your team’s coding preferences and past mistakes. After a month, one developer’s file contained 40+ project-specific rules with noticeably improved output quality. The # key feature makes this effortless: press # and tell Claude “add a rule about X,” and it writes the change to the file.

The flywheel: Claude makes mistake → you correct it → rule gets added → Claude never makes that mistake again → your corrections accumulate into institutional knowledge. Companies using Claude Code in GitHub Actions have built automated systems that analyze agent logs, identify common errors, and auto-generate CLAUDE.md improvements.
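The “rule gets added” step of the flywheel could be scripted along these lines. This is a rough sketch under stated assumptions: `append_rule` is a hypothetical helper, rules are assumed to be stored as one markdown bullet per line, and this is not how Claude Code itself updates the file.

```python
from pathlib import Path


def append_rule(claude_md: Path, rule: str) -> bool:
    """Append `rule` as a bullet unless the same bullet already exists.

    Hypothetical helper for illustration. Returns True if the file changed.
    """
    bullet = f"- {rule.strip()}"
    existing = claude_md.read_text(encoding="utf-8") if claude_md.exists() else ""
    if bullet in existing.splitlines():
        return False  # Skip duplicates so the file doesn't bloat.
    # Make sure the new bullet starts on its own line.
    separator = "" if (not existing or existing.endswith("\n")) else "\n"
    with claude_md.open("a", encoding="utf-8") as handle:
        handle.write(f"{separator}{bullet}\n")
    return True
```

Skipping exact duplicates is a deliberate choice here: every repeated rule costs context without adding information.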

5. Context Is Your Constraint—Ruthlessly Prune

Counter-intuitive advice: delete 30% of what you just added. If Claude already does something correctly without the instruction, remove it. Research shows instruction-following quality decreases uniformly as instruction count increases: you’re not just adding noise to one rule, you’re degrading Claude’s ability to follow all of them.

What to cut: Code style guidelines (your linter handles this), obvious commands Claude discovers via /init, generic advice like “write clean code.”

What to keep: Non-obvious workflows (“always run typecheck after changes”), domain-specific gotchas (“user IDs are UUIDs, not integers”), and project-specific architecture (“auth logic lives in /middleware, never in controllers”).

Pro tip: Add concrete examples of good vs. bad output instead of abstract rules. Claude responds far better to “Bad: using useState for server data. Good: using React Query” than to “follow React best practices.”

The Meta-Rule: Start With /init, Then Iterate

Run /init to generate a starter CLAUDE.md, then delete 40% of what it produces. The generated file often includes obvious things you don’t need spelled out. As one developer put it: “Deleting is easier than creating from scratch, but most people keep everything because they assume the generator knows best.”

Your CLAUDE.md competes for context with your actual work. Every line should earn its place by preventing a specific, recurring problem. Safety rules preventing accidental deletions belong here. Formatting preferences your linter enforces don’t.

The best CLAUDE.md files are under 200 lines and grew organically from real usage rather than upfront speculation about what might matter. Commit it to git so your team iterates collectively, but treat additions with skepticism—the goal is strategic context, not comprehensive documentation.
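One way to keep yourself honest about pruning is a small audit script. The sketch below is only an illustration: the 200-line threshold comes from the rule of thumb above, while `audit_claude_md` and the `GENERIC_PHRASES` list are hypothetical names and values you would adapt to your own project.

```python
from pathlib import Path

MAX_LINES = 200  # Rule of thumb from this article, not a hard limit.
# Illustrative examples of generic advice; extend to taste.
GENERIC_PHRASES = ("write clean code", "best practices")


def audit_claude_md(text: str, max_lines: int = MAX_LINES) -> list[str]:
    """Return human-readable warnings for a CLAUDE.md file's contents."""
    warnings = []
    lines = text.splitlines()
    if len(lines) > max_lines:
        warnings.append(f"{len(lines)} lines; consider pruning below {max_lines}")
    for number, line in enumerate(lines, start=1):
        lowered = line.lower()
        for phrase in GENERIC_PHRASES:
            if phrase in lowered:
                warnings.append(f"line {number}: generic advice ({phrase!r})")
    return warnings


if __name__ == "__main__":
    path = Path("CLAUDE.md")  # Assumes the file sits in the current directory.
    if path.exists():
        for warning in audit_claude_md(path.read_text(encoding="utf-8")):
            print(warning)
```

A script like this cannot tell whether a rule prevents a real, recurring problem; it only flags candidates for a human to review.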
