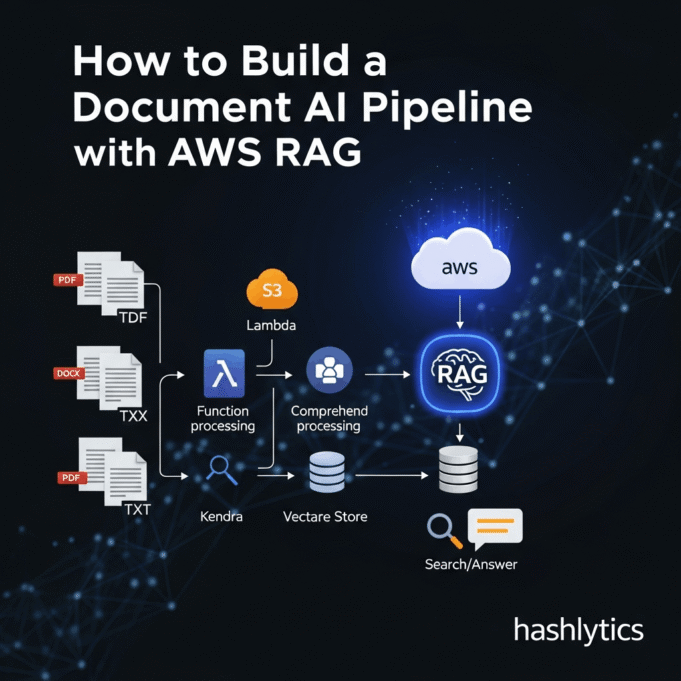

This approach builds a document-extraction pipeline on AWS in which AI agents retrieve and process information from large document stores, making enterprise data easier to search and act on.

Set Up Your AWS Foundation

Start by creating an Amazon S3 bucket as your central document repository. Then configure an S3 event notification that invokes an AWS Lambda function whenever a file is uploaded, so the pipeline starts without manual intervention.
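One way to wire up the upload trigger is with boto3's `put_bucket_notification_configuration`. The sketch below builds the notification configuration that invokes a Lambda function on every new object. The helper names `build_upload_trigger` and `enable_upload_trigger`, the optional suffix filter, and any ARN or bucket name you pass in are illustrative assumptions. S3 also needs permission to invoke the function (granted with Lambda's `add_permission`), which is not shown.

```python
from typing import Any, Dict, Optional


def build_upload_trigger(function_arn: str, suffix: Optional[str] = None) -> Dict[str, Any]:
    """Build an S3 notification config that invokes a Lambda function on new objects."""
    rule: Dict[str, Any] = {
        "LambdaFunctionArn": function_arn,
        "Events": ["s3:ObjectCreated:*"],
    }
    if suffix:
        # Only fire for object keys ending in `suffix`, e.g. ".pdf"
        rule["Filter"] = {"Key": {"FilterRules": [{"Name": "suffix", "Value": suffix}]}}
    return {"LambdaFunctionConfigurations": [rule]}


def enable_upload_trigger(
    s3_client: Any, bucket: str, function_arn: str, suffix: Optional[str] = None
) -> None:
    # Caution: this call REPLACES the bucket's whole notification configuration
    s3_client.put_bucket_notification_configuration(
        Bucket=bucket,
        NotificationConfiguration=build_upload_trigger(function_arn, suffix),
    )
```

With a real client this would be called as `enable_upload_trigger(boto3.client("s3"), bucket_name, function_arn, suffix=".pdf")`. Because the call overwrites existing rules, merge in any notifications the bucket already has before applying it.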

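```python
import urllib.parse

import boto3

s3 = boto3.client('s3')

def lambda_handler(event, context):
    # The S3 event notification carries the bucket name and object key
    record = event['Records'][0]['s3']
    bucket = record['bucket']['name']
    # Object keys arrive URL-encoded (for example, spaces become '+')
    key = urllib.parse.unquote_plus(record['object']['key'])

    # Retrieve the uploaded document as raw bytes
    doc = s3.get_object(Bucket=bucket, Key=key)
    content = doc['Body'].read()

    # process_document is the next pipeline stage (defined elsewhere)
    return process_document(content)
```

Implement RAG for Intelligent Retrieval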

Retrieval-augmented generation (RAG) is the core of this system. Instead of feeding entire documents to a language model, RAG first retrieves the most relevant passages from an index built over the documents in your S3 bucket. Grounding the model in this focused context helps reduce hallucinations and improves extraction accuracy.
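Before passages can be retrieved, the text extracted from each S3 document has to be split into chunks small enough to embed and match precisely. The following is a minimal sketch of fixed-size character chunking with overlap; `chunk_text` and its default sizes are illustrative assumptions, not values taken from a specific library.

```python
from typing import List


def chunk_text(text: str, chunk_size: int = 1000, overlap: int = 200) -> List[str]:
    """Split text into fixed-size character windows that overlap by `overlap` chars."""
    if overlap >= chunk_size:
        raise ValueError("overlap must be smaller than chunk_size")
    step = chunk_size - overlap
    chunks: List[str] = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        # Stop once a window reaches the end of the text
        if start + chunk_size >= len(text):
            break
    return chunks
```

The overlap keeps a sentence that straddles a boundary intact in at least one chunk. Each chunk can then be wrapped in a LangChain `Document` (from `langchain_core.documents`) with metadata such as the S3 key to produce the `documents` list the vector store is built from. LangChain's `RecursiveCharacterTextSplitter` applies the same idea with boundary-aware splitting.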

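```python
# Requires: pip install langchain-aws langchain-community faiss-cpu
from langchain_aws import BedrockEmbeddings
from langchain_community.vectorstores import FAISS

# Embed passages with a Bedrock embedding model
embeddings = BedrockEmbeddings()

# `documents` is a list of LangChain Document objects, e.g. text chunks
# extracted from the files in S3 (loading and splitting not shown)
vectorstore = FAISS.from_documents(documents, embeddings)

def retrieve_context(query, k=3):
    # Return the k passages most similar to the query as one context string
    docs = vectorstore.similarity_search(query, k=k)
    return "\n\n".join(d.page_content for d in docs)
```

Connect Amazon Bedrock for Processing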

Next, integrate your RAG system with Amazon Bedrock. Bedrock offers a range of foundation models for summarization, extraction, or Q&A behind a managed API, so you don't have to host or scale the models yourself.
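Bedrock also exposes the Converse API, which uses one request format across its chat models, so switching models means changing only the model ID. The sketch below assumes your SDK version supports `converse` and that you have enabled access to the chosen model in your region; `ask_bedrock` is a hypothetical helper, and the client is passed in so it can be swapped for a stub in tests.

```python
from typing import Any


def ask_bedrock(client: Any, model_id: str, prompt: str, max_tokens: int = 500) -> str:
    """Send one user message through the Bedrock Converse API and return the reply text."""
    response = client.converse(
        modelId=model_id,
        messages=[{"role": "user", "content": [{"text": prompt}]}],
        inferenceConfig={"maxTokens": max_tokens},
    )
    # The reply is a list of content blocks; keep only the text blocks
    blocks = response["output"]["message"]["content"]
    return "".join(b["text"] for b in blocks if "text" in b)
```

With a real client this becomes `ask_bedrock(boto3.client("bedrock-runtime"), model_id, prompt)`, where `model_id` is any Converse-compatible model you have access to.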

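```python
import json

import boto3

bedrock = boto3.client('bedrock-runtime')

def extract_with_bedrock(context, query):
    # Claude's text-completion API requires alternating Human/Assistant turns
    prompt = (
        "\n\nHuman: Extract information from the following context.\n\n"
        f"{context}\n\nQuery: {query}\n\nAssistant:"
    )
    response = bedrock.invoke_model(
        modelId='anthropic.claude-v2',
        body=json.dumps({
            'prompt': prompt,
            'max_tokens_to_sample': 500,
        }),
    )
    # The response body is a stream of JSON; the generated text is under 'completion'
    return json.loads(response['body'].read())['completion']
```

Deploy AI Agents for Automation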

The final step implements agentic workflows. According to the guide from DeepLearning.AI, these agents act as autonomous workers that parse complex structures, extract entities, and answer queries, creating a fully automated extraction process.
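A minimal sketch of such an agent loop follows, assuming a simple text protocol in which the model replies either `SEARCH: <query>` to request more passages or `ANSWER: <text>` to finish. `run_agent`, the protocol, and the step budget are hypothetical; `retrieve` and `llm` can be any callables, for example `retrieve_context` and a thin wrapper around a Bedrock call.

```python
from typing import Callable, List


def run_agent(
    task: str,
    retrieve: Callable[[str], str],
    llm: Callable[[str], str],
    max_steps: int = 3,
) -> str:
    """Hypothetical agent loop: the model either requests more context or answers."""
    notes: List[str] = []
    for _ in range(max_steps):
        context = "\n---\n".join(notes) or "(none yet)"
        prompt = (
            f"Task: {task}\n"
            f"Context gathered so far:\n{context}\n"
            "Reply with 'SEARCH: <query>' to retrieve more passages, "
            "or 'ANSWER: <text>' once you can complete the task."
        )
        reply = llm(prompt).strip()
        if reply.startswith("ANSWER:"):
            return reply[len("ANSWER:"):].strip()
        if reply.startswith("SEARCH:"):
            # Act: run the requested retrieval and keep the result for the next turn
            notes.append(retrieve(reply[len("SEARCH:"):].strip()))
        else:
            # Unrecognized format: treat the reply itself as the final answer
            return reply
    return "No answer within step budget"
```

Agent frameworks such as Amazon Bedrock Agents or LangChain agents replace this string protocol with structured tool calls, but the cycle of deciding, retrieving, and answering is the same.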

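By combining RAG with agentic workflows, this pipeline handles unstructured data more effectively than traditional rule-based parsing, because it interprets each passage in context instead of relying on fixed templates. That contextual understanding is critical for enterprise applications, where documents rarely share a single layout.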

Building on managed AWS services (S3, Lambda, and Bedrock) helps keep the pipeline scalable, secure, and cost-efficient without servers to operate. This architecture gives developers a repeatable blueprint for document intelligence solutions, from internal search tools to compliance checkers, that process enterprise documents at scale.
