Yelp, renowned for connecting users with local businesses, undertook a significant overhaul of its data infrastructure on AWS to address the challenges of processing vast amounts of user data in near real-time. The company’s adoption of a “streamhouse” architecture enabled sub-minute data freshness and substantial cost reductions. This transformation underscores the increasing importance of real-time insights for businesses that rely on immediate feedback and personalized user experiences.

Yelp’s previous data pipeline, while initially adequate, struggled to keep up with the company’s growth and evolving needs. Complex data governance, observability problems, and long data processing times hindered the system’s efficiency. GDPR compliance efforts revealed critical shortcomings, prompting a comprehensive re-evaluation of the infrastructure.

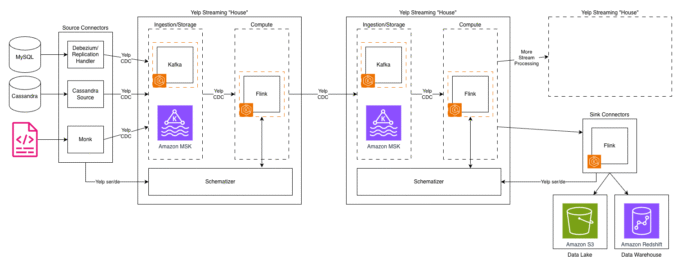

The original architecture, centered around Apache Kafka, became a bottleneck. The proliferation of Kafka topics led to lengthy processing chains and increased latency at each stage. Using Kafka for both ingestion and storage proved inefficient, as Kafka is optimized for high-throughput messaging rather than long-term data warehousing. The proprietary “Yelp CDC” format further added to the complexity, increasing the learning curve for new engineers and complicating integrations with off-the-shelf systems.

The evolution from data warehouses like Amazon Redshift and Snowflake to data lakes, then lakehouses, and now streamhouses reflects a continuous pursuit of the right balance between structure, flexibility, and cost. Data warehouses offered structure but lacked real-time capabilities. Data lakes provided flexibility but often lacked governance. Lakehouses, such as those built on Apache Iceberg and Delta Lake, aimed to combine the advantages of both approaches.

However, even lakehouses often treated streaming as an afterthought. Yelp required a solution that prioritized streaming while maintaining lakehouse economics.

The transformation involved three key shifts: decoupling ingestion from storage, unifying the data format, and implementing streamhouse tables. Decoupling ingestion from storage, with Flink CDC handling ingestion and S3 handling storage, resulted in an 80% reduction in storage costs. Migrating to the industry-standard Debezium format and replacing Kafka topics with Paimon tables further streamlined the architecture.
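To make the move to a standard change-data-capture format more concrete, here is a minimal sketch of how Debezium-style change events can be folded into a keyed table. The envelope fields `op`, `before`, `after`, and `ts_ms` come from the Debezium event format; the business rows, the `id` key, and the `apply_change` and `materialize` helpers are hypothetical and are not taken from Yelp’s implementation. The sketch only illustrates the idea of keeping the latest row per key; it does not reflect how Paimon stores or merges data internally.

```python
from typing import Any, Dict, Iterable

Row = Dict[str, Any]


def apply_change(table: Dict[Any, Row], event: Dict[str, Any], key_field: str = "id") -> None:
    """Apply one Debezium-style change event to an in-memory keyed table."""
    op = event["op"]
    if op in ("c", "r", "u"):
        # create, snapshot read, update: upsert the new row image
        after = event["after"]
        table[after[key_field]] = after
    elif op == "d":
        # delete: the last known row image is carried in "before"
        before = event["before"]
        table.pop(before[key_field], None)
    else:
        raise ValueError(f"unsupported op: {op!r}")


def materialize(events: Iterable[Dict[str, Any]], key_field: str = "id") -> Dict[Any, Row]:
    """Replay events in order and return the resulting table state."""
    table: Dict[Any, Row] = {}
    for event in events:
        apply_change(table, event, key_field)
    return table


# Hypothetical sample events; real Debezium events also carry "source" metadata.
EVENTS = [
    {"op": "c", "before": None,
     "after": {"id": 1, "name": "Cafe A", "stars": 4.0}, "ts_ms": 1000},
    {"op": "c", "before": None,
     "after": {"id": 2, "name": "Bar B", "stars": 3.5}, "ts_ms": 1001},
    {"op": "u", "before": {"id": 1, "name": "Cafe A", "stars": 4.0},
     "after": {"id": 1, "name": "Cafe A", "stars": 4.5}, "ts_ms": 1002},
    {"op": "d", "before": {"id": 2, "name": "Bar B", "stars": 3.5},
     "after": None, "ts_ms": 1003},
]

if __name__ == "__main__":
    print(materialize(EVENTS))
    # {1: {'id': 1, 'name': 'Cafe A', 'stars': 4.5}}
```

Because the envelope is a widely used convention, off-the-shelf consumers can interpret these events without custom decoding, which is the integration benefit the format change was after.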

Key design decisions included adopting SQL as the primary interface, separating compute and storage, and embracing open-source standards. SQL democratized access to streaming data, while the separation of compute and storage enabled independent scaling. Embracing open-source standards reduced the maintenance burden and accelerated feature development.

The transition to the new streamhouse architecture followed a carefully planned path, encompassing prototype development, phased migration, and systematic validation. This ensured a smooth transition with minimal disruption to existing systems.

The migration strategy focused on a phased rollout, moving a vertical slice of data rather than the entire system at once. This approach allowed the team to build confidence and identify issues early. The rollout involved selecting a representative use case, re-implementing it on the new stack, shadow-launching the new stack in production, verifying the new deployment, and switching live traffic only after thorough validation.
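The verification step of a shadow launch can be sketched as a keyed comparison between the output of the legacy pipeline and the output of the shadow pipeline. Everything here is an assumption for illustration: the `compare_outputs` and `ready_to_switch` helpers, the row shapes, and the zero-tolerance default are hypothetical and do not describe Yelp’s actual tooling.

```python
from typing import Any, Dict, List

Row = Dict[str, Any]


def compare_outputs(legacy: Dict[Any, Row], shadow: Dict[Any, Row]) -> Dict[str, List[Any]]:
    """Return keys that are missing, unexpected, or different in the shadow output."""
    return {
        "missing": sorted(k for k in legacy if k not in shadow),
        "unexpected": sorted(k for k in shadow if k not in legacy),
        "mismatched": sorted(
            k for k in legacy if k in shadow and legacy[k] != shadow[k]
        ),
    }


def ready_to_switch(report: Dict[str, List[Any]], total_rows: int,
                    max_diff_ratio: float = 0.0) -> bool:
    """Gate the traffic switch on the share of differing rows (threshold is an assumption)."""
    diffs = sum(len(keys) for keys in report.values())
    if total_rows == 0:
        return diffs == 0
    return diffs / total_rows <= max_diff_ratio


if __name__ == "__main__":
    legacy = {1: {"stars": 4.5}, 2: {"stars": 3.0}, 3: {"stars": 5.0}}
    shadow = {1: {"stars": 4.5}, 2: {"stars": 3.5}, 4: {"stars": 2.0}}
    report = compare_outputs(legacy, shadow)
    print(report)
    # {'missing': [3], 'unexpected': [4], 'mismatched': [2]}
    print(ready_to_switch(report, total_rows=len(legacy)))
    # False
```

Running both stacks side by side and switching only when a report like this is clean is what lets a vertical slice build confidence before the next slice moves.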

The migration surfaced several technical challenges, including system integration, performance tuning, and data validation. Comprehensive monitoring was developed to track end-to-end latencies, and automated alerting was implemented to detect any degradation in performance. Data validation was particularly challenging, requiring custom tooling to verify ordering guarantees and data integrity.
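Two of the checks described above, per-key ordering and end-to-end latency alerting, can be sketched in a few lines. This is an illustration under stated assumptions: the helper names, the use of a per-key sequence number, the nearest-rank percentile, and the 60-second threshold (chosen to mirror the sub-minute freshness goal) are all hypothetical rather than details of Yelp’s custom tooling.

```python
import math
from typing import Dict, Iterable, List, Sequence, Tuple


def find_ordering_violations(
    events: Iterable[Tuple[str, int]]
) -> List[Tuple[str, int, int]]:
    """Scan (key, sequence) pairs in arrival order.

    Returns (key, highest_seen, offending_sequence) for every event whose
    sequence number does not advance past the highest one seen for its key.
    """
    highest: Dict[str, int] = {}
    violations: List[Tuple[str, int, int]] = []
    for key, seq in events:
        prev = highest.get(key)
        if prev is not None and seq <= prev:
            violations.append((key, prev, seq))
        else:
            highest[key] = seq
    return violations


def percentile(values: Sequence[float], pct: float) -> float:
    """Nearest-rank percentile of a non-empty sequence."""
    if not values:
        raise ValueError("values must not be empty")
    ordered = sorted(values)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]


def latency_breached(latencies_s: Sequence[float],
                     threshold_s: float = 60.0, pct: float = 99.0) -> bool:
    """True when the chosen latency percentile exceeds the threshold."""
    return percentile(latencies_s, pct) > threshold_s


if __name__ == "__main__":
    arrivals = [("a", 1), ("b", 1), ("a", 2), ("b", 3), ("a", 2), ("b", 2)]
    print(find_ordering_violations(arrivals))
    # [('a', 2, 2), ('b', 3, 2)]
    print(latency_breached([5, 10, 20, 30, 90]))
    # True
```

Checking order per key rather than globally matches what a keyed table needs: updates to the same row must arrive in sequence, while unrelated rows may interleave freely.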

The implementation simplified the streaming stack, enabled fine-grained access management, and brought built-in data management features. Yelp also expects lower operational costs and easier troubleshooting as a result of the transition.

The transformation yielded valuable insights about both technical implementation and organizational change management. These lessons can benefit others undertaking similar modernization efforts.

Choosing battle-tested open-source solutions like Apache Paimon and Flink CDC proved wise. SQL interfaces democratized access to streaming data, and the separation of storage and compute unlocked cost savings and operational flexibility.

A phased migration reduced risk, and backward compatibility enabled progress. Investing in learning new technologies paid dividends through increased productivity and reduced operational burden.

Yelp’s move to a streaming lakehouse (streamhouse) architecture delivered results across multiple dimensions. By using Amazon S3 and Amazon MSK, the company reduced analytics data latencies from 18 hours to just minutes while cutting storage costs by 80%. The migration also simplified the architecture, reducing maintenance overhead and improving reliability.

Yelp encourages organizations facing similar challenges, particularly those using cloud services and open-source technologies like Apache Paimon, to explore the streamhouse approach. Any such implementation should follow security best practices.