Anthropic is acquiring Bun, the breakthrough JavaScript runtime, to further accelerate Claude Code’s growth. The deal, valued in the “low hundreds of millions of dollars,” marks Anthropic’s first-ever acquisition.

As one X commenter perfectly captured the tension: “Anthropic just bought the runtime that powers their AI coding tool. Now they control both the AI that writes your code AND the infrastructure that runs it. This is either brilliant vertical integration or a terrifying consolidation of power.”

The $1 Billion Milestone

Claude Code reached $1 billion in run-rate revenue just six months after becoming available to the public in May 2025. For context:

| Month | Annualized Run-Rate Revenue | Scope |
|---|---|---|
| January 2025 | $1 billion | Anthropic total |
| August 2025 | $5 billion | Anthropic total |
| October 2025 | $7 billion | Anthropic total |
| November 2025 | $1 billion | Claude Code alone |

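Claude Code now accounts for a substantial share of Anthropic’s revenue (roughly one-seventh of the $7 billion run-rate reported for October), with most of the rest coming from API customers using Claude Haiku, Sonnet, and Opus, many of them for coding assistants.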
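The share and growth figures can be checked with a few lines of arithmetic. The sketch below is a minimal Python example that uses only the numbers in the table: it computes Claude Code’s share of the October run-rate and the company-wide growth multiple from January to October 2025. The variable and function names are illustrative. Comparing November’s Claude Code figure against October’s company total is only an approximation, because the two numbers come from different months.

```python
# Annualized run-rate figures from the table above, in billions of USD.
anthropic_run_rate = {
    "2025-01": 1.0,
    "2025-08": 5.0,
    "2025-10": 7.0,
}
claude_code_run_rate_nov = 1.0  # Claude Code alone, November 2025


def share_of_total(part: float, total: float) -> float:
    """Fraction of `total` represented by `part`."""
    return part / total


def growth_multiple(start: float, end: float) -> float:
    """How many times larger `end` is than `start`."""
    return end / start


# Approximation: November Claude Code figure vs. October company-wide figure.
share = share_of_total(claude_code_run_rate_nov, anthropic_run_rate["2025-10"])
multiple = growth_multiple(anthropic_run_rate["2025-01"], anthropic_run_rate["2025-10"])

print(f"Claude Code share of October run-rate: {share:.1%}")  # 14.3%
print(f"Jan -> Oct growth multiple: {multiple:.0f}x")  # 7x
```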

Why Bun Matters

At the time of the deal, Bun had:

- 7.2 million monthly downloads (25% growth in October alone)

- Over four years of runway, so the team didn’t have to sell

- 82,000+ GitHub stars

- Zero revenue, which the acquisition lets it avoid resolving through a “Bun tries to figure out monetization” phase

The Technical Advantage

Bun is built to be faster and more integrated than Node.js:

- Built on Zig and Apple’s JavaScriptCore engine (not V8)

- All-in-one: runtime, package manager, bundler, and test runner

- Reported to run JS/TypeScript apps as much as 4x faster in many cases

- Claude Code ships as a Bun executable, so if Bun breaks, Claude Code breaks

One enthusiastic X commenter congratulated Bun creator Jarred Sumner: “Wow, congrats @jarredsumner! Amazing that you had such a huge impact on open source and still managed to get a great financial outcome for yourself as well. I use bun for all my JS projects!”

Another added: “Smart move, Bun’s speed and tooling could give Claude Code a real edge in developer workflows.”

The Vertical Integration Debate

Why This Is Brilliant

- Anthropic controls the full stack: model, runtime, and infrastructure

- Bun gets a first look at what’s coming in AI coding tools

- Bun gets resources to ship faster without VC monetization pressure

- Similar to the Chrome/V8, Safari/JavaScriptCore, and Firefox/SpiderMonkey relationships

Why This Is Terrifying

- One company now controls both the AI that writes code and the infrastructure that runs it

- Lock-in risk: if Claude Code becomes essential, developers come to depend on an Anthropic-controlled runtime

- Could marginalize competitors who don’t own their runtime

- Concentrates power in the AI tooling ecosystem

What Bun Promises

From the official FAQ:

- Is Bun still open-source & MIT-licensed? Yes.

- Will Bun still be developed in public on GitHub? Yes.

- Does Bun still care about Node.js compatibility? Yes.

- Will the same team keep working on Bun full-time? Yes, now backed by the resources of a leading AI lab instead of VC pressure.

As Sumner wrote: “We didn’t have to join Anthropic. Instead of putting our users & community through ‘Bun, the VC-backed startup tries to figure out monetization’—thanks to Anthropic, we can skip that chapter entirely.”

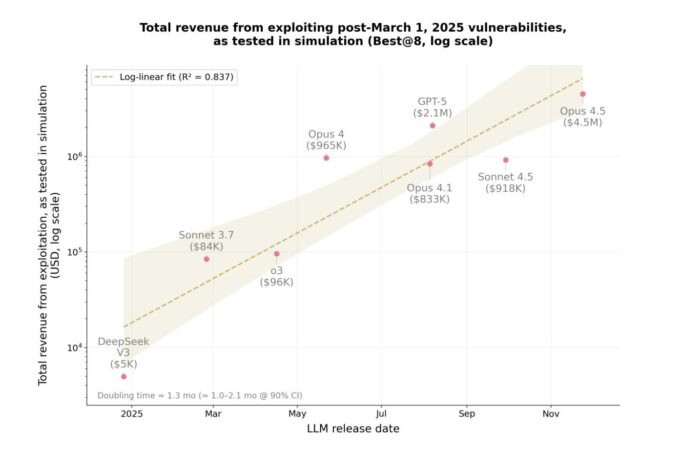

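Anthropic’s separate research on AI agents exploiting smart contracts, evaluated on its SCONE-bench benchmark, produced the following results:

| Dataset | Contracts | Success Rate | Simulated Revenue |
|---|---|---|---|
| Full historical (2020-2025) | 405 | 51% (207/405) | $550.1 million |
| Post-March 2025 only | 34 | 56% (19/34) | $4.6 million |
| Live contracts (zero-days) | 2,849 | 2 novel bugs found | $3,694 |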

One commenter noted a critical insight: “Look at this: each step is 10x more revenue, 10x. Models get better at cyber exploiting so fast that its performance here doubles every 1.3 months. I couldn’t believe it honestly.”
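To see what a 1.3-month doubling time implies, the minimal Python sketch below assumes the trend is a steady exponential, so that growth after t months is 2^(t / 1.3). This is an extrapolation of a reported trend, not a forecast. The function names are illustrative. At this rate, a 10x increase takes about 1.3 × log2(10), or roughly 4.3 months.

```python
import math

DOUBLING_TIME_MONTHS = 1.3  # doubling period quoted in the comment above


def growth_factor(months: float, doubling_time: float = DOUBLING_TIME_MONTHS) -> float:
    """Multiplicative growth after `months`, assuming steady exponential doubling."""
    return 2 ** (months / doubling_time)


def months_to_multiply(factor: float, doubling_time: float = DOUBLING_TIME_MONTHS) -> float:
    """Months needed to grow by `factor` at the given doubling time."""
    return doubling_time * math.log2(factor)


for months in (1.3, 6, 12):
    print(f"{months:>4} months -> {growth_factor(months):,.1f}x")
print(f"10x takes about {months_to_multiply(10):.1f} months")
```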

The SCONE Benchmark Question

One user asked: “What do you think of SCONE as a benchmark here? As a buyer I’m struggling to find a good measuring stick for competing vendors/models. Is SCONE something I should be using to evaluate commercial offers?”

What SCONE Measures

SCONE-bench (Smart CONtracts Exploitation) evaluates:

- Economic impact: Success is measured in simulated dollars stolen, not just bugs found

- Real exploits: 405 contracts that were actually hacked between 2020 and 2025 on Ethereum, BNB Smart Chain (BSC), and Base

- Agent capabilities: Long-horizon reasoning, tool use, error recovery

- Zero-day discovery: Can models find new vulnerabilities in live code?
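To make the dollar-based metric concrete, the minimal Python sketch below shows how per-contract outcomes could be combined into the two headline numbers: success rate and total simulated revenue. The `ExploitResult` schema and `score` function are hypothetical illustrations and do not reflect SCONE-bench’s actual data format or harness. Revenue is counted only for successful runs. On the table’s figures, this aggregation gives 207/405 ≈ 51% and 19/34 ≈ 56%.

```python
from dataclasses import dataclass


@dataclass
class ExploitResult:
    """Outcome of one agent run against one contract (hypothetical schema)."""
    contract_id: str
    exploited: bool
    simulated_revenue_usd: float  # value extracted in simulation; 0 if the exploit failed


def score(results: list[ExploitResult]) -> dict[str, float]:
    """Aggregate per-contract outcomes into success rate and total simulated revenue."""
    if not results:
        raise ValueError("no results to score")
    successes = [r for r in results if r.exploited]
    return {
        "contracts": len(results),
        "successes": len(successes),
        "success_rate": len(successes) / len(results),
        "simulated_revenue_usd": sum(r.simulated_revenue_usd for r in successes),
    }


# Toy example with made-up numbers, not SCONE-bench data.
runs = [
    ExploitResult("c1", True, 250_000.0),
    ExploitResult("c2", False, 0.0),
    ExploitResult("c3", True, 40_000.0),
    ExploitResult("c4", False, 0.0),
]
print(score(runs))
```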

Should You Use It to Evaluate Vendors?

Yes, with caveats. In its favor:

- It is a good proxy for cyber capabilities such as control-flow reasoning and boundary analysis

- Dollar-based metrics provide a concrete risk assessment

- The benchmark is now public, so vendors can report comparable scores

The caveats:

- It is specialized to blockchain and may not generalize to all security contexts

- High scores could reflect memorization rather than true reasoning

The Memorization Question

One researcher raised a critical concern: “Our A1 preprint came out in July, about 4 months before this, and explored basically the same idea. I am curious whether your team checked if the models memorise these attacks from training data (we did)? It would be nice if our earlier work could be acknowledged.”

Anthropic’s Answer

The team isolated 34 contracts exploited after March 1, 2025, which is later than the knowledge cutoffs of all the models tested. This design is meant to rule out memorization. On this cleaner set:

- Claude Opus 4.5: 17 exploits, $4.5M

- Claude Sonnet 4.5 and GPT-5: 2 additional exploits, $100K+

- Total: 19/34 contracts (56% success rate)
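The cutoff design can be expressed as a simple contamination check: split the benchmark by exploit date relative to a knowledge cutoff and compare success rates on each side. A model that does much better on pre-cutoff incidents than on post-cutoff ones may be recalling known attacks. The minimal Python sketch below assumes a hypothetical `Case` record and treats contracts exploited on or after the cutoff date as "post-cutoff." It illustrates the idea and is not Anthropic’s evaluation code.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class Case:
    """One benchmark contract and whether the agent exploited it (hypothetical schema)."""
    contract_id: str
    exploit_date: date  # when the real-world exploit happened
    exploited_by_agent: bool


def success_rate(cases: list[Case]) -> float:
    """Fraction of cases the agent exploited; NaN if there are no cases."""
    return sum(c.exploited_by_agent for c in cases) / len(cases) if cases else float("nan")


def contamination_gap(cases: list[Case], cutoff: date) -> tuple[float, float, float]:
    """Compare success before vs. on/after a knowledge cutoff.

    Returns (pre_cutoff_rate, post_cutoff_rate, gap). A large positive gap
    hints that the model may be recalling known exploits rather than reasoning.
    """
    pre = [c for c in cases if c.exploit_date < cutoff]
    post = [c for c in cases if c.exploit_date >= cutoff]
    pre_rate, post_rate = success_rate(pre), success_rate(post)
    return pre_rate, post_rate, pre_rate - post_rate


# Toy data, not SCONE-bench results.
cases = [
    Case("a", date(2022, 5, 1), True),
    Case("b", date(2023, 8, 9), True),
    Case("c", date(2025, 4, 2), True),
    Case("d", date(2025, 6, 30), False),
]
print(contamination_gap(cases, cutoff=date(2025, 3, 1)))  # (1.0, 0.5, 0.5)
```

A gap near zero, or a post-cutoff rate higher than the pre-cutoff rate, is consistent with reasoning rather than recall, although small post-cutoff sets such as 34 contracts leave wide statistical uncertainty.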

Additionally, on 2,849 live contracts with no known vulnerabilities, both Sonnet 4.5 and GPT-5 uncovered 2 novel zero-day vulnerabilities, with exploits worth $3,694. This is evidence that the models aren’t just recalling training data.

Real-World Example: The Balancer Exploit

In November 2025, an attacker exploited an authorization bug in Balancer to withdraw other users’ funds, stealing over $120 million. Anthropic’s agents replicated similar tactics, autonomously navigating control flows and weak validations.

The same core skills that enable smart contract exploitation—control-flow reasoning, boundary analysis, programming fluency—apply to traditional software too.

The Cost Economics

According to Cryptopolitan, GPT-5 scanned 2,849 contracts at an API cost of $3,476, finding exploits worth $3,694.

Net profit: $218 (or about $109 per zero-day found)

More importantly: token costs for exploits dropped 70.2% in six months, meaning attackers can now run 3.4x more attacks for the same budget.
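These figures are easy to reproduce. The minimal Python sketch below recomputes the net profit, profit per zero-day, and API cost per contract scanned from the Cryptopolitan numbers. It also shows where the 3.4x figure comes from: if the cost per attack falls by 70.2%, a fixed budget covers 1 / (1 - 0.702) ≈ 3.4 times as many attempts. The function names are illustrative, and the model ignores all costs other than API spend.

```python
def scan_economics(
    api_cost_usd: float,
    exploit_value_usd: float,
    bugs_found: int,
    contracts_scanned: int,
) -> dict[str, float]:
    """Net profit, profit per bug, and API cost per contract for one scanning campaign."""
    net = exploit_value_usd - api_cost_usd
    return {
        "net_profit_usd": net,
        "profit_per_bug_usd": net / bugs_found,
        "cost_per_contract_usd": api_cost_usd / contracts_scanned,
    }


def attacks_per_budget_multiplier(cost_drop: float) -> float:
    """If unit cost falls by `cost_drop` (e.g. 0.702), the same budget buys
    1 / (1 - cost_drop) times as many attacks."""
    return 1 / (1 - cost_drop)


# GPT-5 live scan figures quoted above.
econ = scan_economics(api_cost_usd=3_476, exploit_value_usd=3_694, bugs_found=2, contracts_scanned=2_849)
print(econ)
print(f"{attacks_per_budget_multiplier(0.702):.1f}x")  # 3.4x
```

On these numbers, each contract costs about $1.22 in API spend to scan.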

The Defense Argument

Anthropic emphasizes dual use: “The same agents capable of exploiting smart contracts can be deployed to audit and patch vulnerabilities.”

From the report:

“We hope that this post helps to update defenders’ mental model of the risks to match reality—now is the time to adopt AI for defense.”

The full SCONE-bench dataset is now public to help developers test and patch contracts before live deployment.

On the Bun Acquisition

Anthropic now controls:

- The AI that writes your code (Claude)

- The infrastructure that runs it (Bun)

- A coding product at $1 billion in run-rate revenue (Claude Code, six months after launch)

Whether this is “brilliant vertical integration” or “terrifying consolidation” depends on whether Bun remains truly open-source and whether Anthropic uses this control responsibly.

On the Smart Contract Research

AI models can now:

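- Exploit more than half of previously hacked contracts in a benchmark setting (51% of the 405 historical contracts, 56% of the 34 post-cutoff ones)

- Find zero-days in live production code

- Double simulated exploit revenue roughly every 1.3 months

- Operate at a net profit ($218 in total, about $109 per zero-day found)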

As one commenter observed: “Higher dots = more dangerous the model could be.”

For buyers evaluating vendors, SCONE provides a concrete, dollar-based metric for cyber capabilities, but it should be supplemented with domain-specific benchmarks and memorization checks.

Both developments point to the same future: AI companies are consolidating infrastructure (Bun), while AI capabilities such as smart contract exploitation are advancing faster than defenses can keep up.